Pondhouse Data AI - Tips & Tutorials for Data & AI 23

OpenAI Joins MCP De Facto Standard | Stunning Images with GPT-4o | Langfuse for AI Debugging | OpenWebUI with massive Update

Hey there,

This week’s newsletter is packed with updates that push practical AI forward. We’re highlighting OpenWebUI’s big new release, which makes local AI interfaces more powerful and flexible than ever. You'll also learn how to get started with MCP, now quickly becoming the de facto standard for connecting LLMs to real tools and data.

Plus, we’re diving into OpenAI’s latest moves: image generation with GPT-4o is now live, and their surprise adoption of Anthropic’s Model Context Protocol (MCP) is shaking up the ecosystem.

As always, we’ve got one tool and one tip to help you build better AI—faster.

Enjoy the read!

Cheers, Andreas & Sascha

In today's edition:

📚 Tutorial of the Week: How OpenWebUI Just Got Even Better – New Features + Our Setup Guides

🛠️ Tool Spotlight: Langfuse – The Open-Source Tracing & Debugging Tool for LLM Apps

📰 Top News: GPT-4o Image Generation Rolls Out | OpenAI Embraces MCP as Industry Standard

💡 Tips: How to Use MCP – The Now-De Facto Standard for AI Tool Integration

Let's get started!

Find this Newsletter helpful?

Please forward it to your colleagues and friends - it helps us tremendously.

Tutorial of the week

OpenWebUI Got a Big Upgrade — Here’s How to Use It

This week, we’re spotlighting a previously published tutorial series that’s more relevant than ever. Why? Because OpenWebUI just rolled out one of its biggest updates yet, bringing powerful new features and major improvements that make it a top choice for anyone running local LLMs or building AI-powered assistants.

🔄 What’s New in the Latest OpenWebUI Release?

According to the latest release notes, OpenWebUI has introduced a long list of enhancements:

Multi-user support with per-user chat separation

Improved integrations with Ollama and LM Studio

A revamped file upload system for better context management

Dynamic system prompts and advanced memory features

A smoother, faster frontend with improved UI responsiveness

These changes make OpenWebUI not just a frontend for chatting with local models, but a flexible control center for working with LLMs in production-like environments.

💡 Already Published, Now More Useful Than Ever

To help you take advantage of this, we’re resurfacing two tutorials we previously published:

📘 Introduction to OpenWebUI 🔗

Learn how to set up and use OpenWebUI, what it can do out of the box, and how to connect it to local models.

🔧 Integrating OpenWebUI with n8n 🔗

A hands-on guide for connecting OpenWebUI to workflows using the powerful n8n automation platform.

Whether you’re already using OpenWebUI or just starting out, now’s the perfect time to explore its full potential. This latest update turns it into an even stronger option for running fast, private, and flexible AI assistants on your own terms.

Tool of the week

Langfuse – Observability for LLMs Made Simple

As LLM-powered applications grow more complex, understanding what’s going on inside your agents and pipelines becomes more than a nice-to-have—it’s essential. That’s where Langfuse comes in.

Langfuse is an open-source observability platform built specifically for LLM-based applications. It helps you trace, log, debug, and evaluate everything from single prompts to full multi-step agents. Whether you're working with LangChain, custom pipelines, or tools like OpenAI and Anthropic APIs, Langfuse gives you visibility into every step of your system.

🔍 Key Features:

Detailed Tracing & Logging: Visualize each step in your prompt/agent flow, including tool calls, retries, intermediate outputs, and model responses.

Custom Evaluations: Add your own test logic, metrics, or even LLM-generated evaluations to understand quality and correctness.

Flexible Integration: Works with many LLM frameworks out of the box and offers full API access for custom setups.

Live Debugging: Step through sessions and identify where your agents fail, loop, or return wrong answers.
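To make the tracing idea concrete, here is a minimal sketch in plain Python. It is not Langfuse's API: `span`, `TRACE`, and the toy `answer` pipeline are hypothetical names we made up for illustration, and the retriever and model call are stubs. It only shows the kind of nested, per-step record (parent step, duration, status, output) that an observability tool collects for you.

```python
import time
from contextlib import contextmanager

# Hypothetical in-memory trace store; a real observability tool would ship
# these records to a backend instead of keeping them in a list.
TRACE = []
_stack = []  # names of the spans that are currently open


@contextmanager
def span(name, **metadata):
    """Record one step of a pipeline: its parent, duration, and outcome."""
    record = {
        "name": name,
        "parent": _stack[-1] if _stack else None,
        "metadata": metadata,
        "status": "ok",
    }
    _stack.append(name)
    start = time.perf_counter()
    try:
        yield record
    except Exception as exc:
        record["status"] = f"error: {exc}"
        raise
    finally:
        # Runs on success and on failure, so every step ends up in the trace.
        record["duration_s"] = time.perf_counter() - start
        _stack.pop()
        TRACE.append(record)


def answer(question):
    """Toy RAG pipeline with a stubbed retriever and a stubbed model call."""
    with span("answer", question=question):
        with span("retrieve") as step:
            docs = ["MCP is an open standard introduced by Anthropic."]
            step["output"] = docs
        with span("generate", model="stub-model") as step:
            reply = f"Based on {len(docs)} document(s): {docs[0]}"
            step["output"] = reply
    return reply


if __name__ == "__main__":
    answer("What is MCP?")
    for record in TRACE:
        print(record["name"], record["parent"], record["status"])
```

Each step closes before its parent does, so the records arrive innermost first. With a per-step duration and status in hand, spotting the one slow or failing component in a longer chain becomes a simple filter over the trace.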

Langfuse is especially useful for multi-step agents and RAG pipelines, where one failing or slow component can tank the entire user experience. Their recent blog post on agent observability walks through exactly how Langfuse helps teams monitor performance, catch bugs, and continuously improve their agents.

Langfuse is open source and available on GitHub:

🔗 Langfuse GitHub Repository

Documentation and setup guides:

🔗 Langfuse Docs

Whether you're running a solo side project or a production-grade AI assistant, Langfuse makes it easier to ship, scale, and trust your LLM-powered apps.

Top News of the week

OpenAI Releases GPT-4o Image Generation and Embraces Anthropic’s MCP Standard

OpenAI made two big moves that show how fast the ecosystem is evolving—both in what models can do and how they connect to the rest of the world.

First, the new GPT-4o image generation feature is now rolling out. It produces photorealistic, high-quality images directly inside ChatGPT, and it’s fast. This upgrade puts OpenAI’s visual capabilities on par with the best in the field—available to Plus, Pro, and Free users, with Enterprise and Edu support coming soon. 🔗 See the announcement

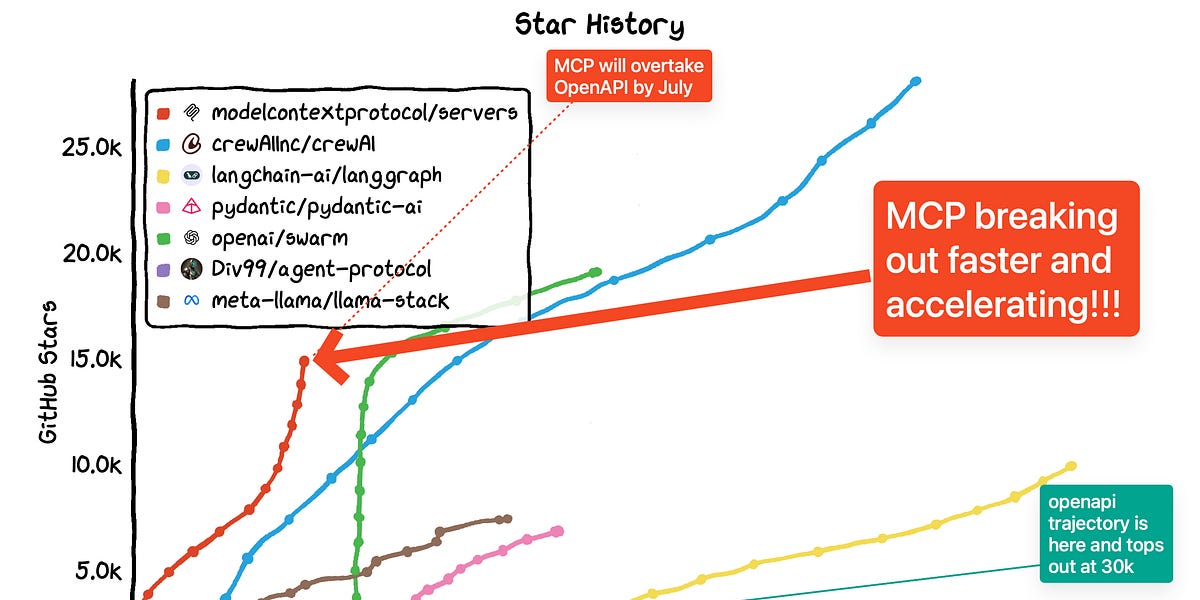

Second, OpenAI is now adopting the Model Context Protocol (MCP), an open standard introduced by Anthropic to help AI systems connect to external tools and data sources. The protocol allows apps like ChatGPT to plug into local data or services using a unified format. This is a huge win for MCP, which now seems poised to become the next big interoperability standard, much as OpenAI’s API format became the de facto standard for LLM APIs in previous years. 🔗 More on why MCP is winning

These two developments signal that OpenAI is doubling down on usability and integration—not just making smarter models, but making them more connected and creative.

Also in the news

Anthropic Researchers Are “Tracing Thoughts” in Language Models

Anthropic has published new research exploring how to trace the internal reasoning processes of language models. By analyzing attention patterns, intermediate activations, and token-level contributions, they aim to better understand how models "think" and make decisions. The work is part of Anthropic’s broader goal of making AI systems more interpretable and controllable—key steps toward safer, more trustworthy models.

GitDiagram: Visualize Your Git Projects with One Command

GitDiagram is a newly released open-source tool that automatically generates architecture diagrams for your Git repositories. With just a single command, it scans your codebase and creates a visual overview of project structure, helping you better understand, document, or onboard into any codebase. It's especially useful for teams working on complex, multi-service systems.

OpenAI Launches Free AI Learning Platform

OpenAI has launched the OpenAI Academy, a free educational platform offering online courses and resources to help people learn how to use AI tools effectively. It includes beginner-friendly lessons on prompting, image generation, and coding—with content co-developed by partners like Georgia Tech and Miami Dade College.

Tip of the week

Getting Started with MCP – The New De Facto Standard for Connecting AI to Your Data

With OpenAI now backing the Model Context Protocol (MCP), it’s clear this open standard is quickly becoming the default way to connect language models to tools, files, and live data sources. Whether you're working with Claude, ChatGPT, or another assistant, MCP gives you a unified interface to expose functions, APIs, and local systems, so you don't have to write custom glue code for each assistant.

🧠 What’s the Model Context Protocol?

MCP allows AI assistants to query external tools and services through a standardized protocol. You can connect local files, databases, APIs, cloud services—or anything else you expose via an MCP server. The protocol handles authentication, input formatting, and response parsing in a clean, structured way.
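Under the hood, MCP messages are JSON-RPC 2.0, and tools are discovered and invoked through the `tools/list` and `tools/call` methods. The sketch below shows roughly what that exchange looks like, using only the Python standard library. Treat it as an illustration of the message shapes, not a working MCP server: the `add` tool, the `TOOLS` registry, and the `handle` function are our own hypothetical names, and we leave out the initialization handshake, the transport layer, per-tool input schemas, and handling of unknown tool names.

```python
import json

# Hypothetical tool registry; a real MCP server would also publish a JSON
# Schema describing each tool's input.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "handler": lambda args: args["a"] + args["b"],
    },
}


def handle(raw_request):
    """Answer one JSON-RPC 2.0 request string with a response string."""
    request = json.loads(raw_request)
    method = request["method"]
    params = request.get("params", {})

    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": tool["description"]}
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS[params["name"]]
        value = tool["handler"](params.get("arguments", {}))
        # Tool results come back as a list of typed content items.
        result = {
            "content": [{"type": "text", "text": str(value)}],
            "isError": False,
        }
    else:
        # -32601 is the standard JSON-RPC "method not found" error code.
        error = {"code": -32601, "message": f"Method not found: {method}"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})

    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# What an assistant would send to invoke the tool.
call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
}
response = json.loads(handle(json.dumps(call)))
print(response["result"]["content"][0]["text"])
```

In practice you would rarely write this by hand. The official SDKs and the pre-built servers take care of the protocol details, so you only define the tools themselves.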

🚀 How to Get Started:

🎥 Video Walkthrough: This step-by-step intro video shows you how to install and configure MCP, and includes live demos of real tool integrations. It’s an excellent starting point for developers who want to understand both the “why” and “how” of MCP setup.

📦 Use Pre-Built Servers: Skip the boilerplate. This GitHub repo lists dozens of ready-to-use MCP server implementations—everything from Google Drive to GitHub and local file systems:

🔗 Awesome MCP Servers on GitHub
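Most pre-built servers are started by the client itself from a small config entry. As a hedged example, the snippet below generates the kind of `mcpServers` entry that Claude Desktop reads from its `claude_desktop_config.json`, here for the reference filesystem server. The directory path is a placeholder and `server_entry` is just a helper we made up for this sketch. Other clients use their own config formats, so check your client's documentation before copying it.

```python
import json


def server_entry(command, *args):
    """Build one server entry: the executable to launch plus its arguments."""
    return {"command": command, "args": list(args)}


config = {
    "mcpServers": {
        # Placeholder directory; replace it with a folder you want to expose.
        "filesystem": server_entry(
            "npx",
            "-y",
            "@modelcontextprotocol/server-filesystem",
            "/path/to/allowed/dir",
        ),
    }
}

# Print the JSON so it can be pasted into the client's config file.
print(json.dumps(config, indent=2))
```

The client launches the listed command as a local process and talks to it over standard input and output, which is why the entry only needs an executable and its arguments.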

If you’re building any assistant with tool support, MCP is the fastest way to make your AI actually useful. It's clean, flexible, and quickly becoming the standard for production AI workflows.

The “Awesome MCP Servers” repo is fully packed with ready-to-use MCP server implementations.

We hope you liked our newsletter and that you'll stay tuned for the next edition. If you need help with your AI tasks and implementations, let us know. We are happy to help.