Pondhouse Data AI - Tips & Tutorials for Data & AI 25

Build MCP Servers in Minutes | Text-to-Visuals with Napkin AI | 2025: The Year of Agents

Hey there,

This week, we’re leaning into the practical and the forward-looking. You’ll find a step-by-step guide to building your own MCP server with FastMCP, a framework that is now part of the official MCP Python SDK. We're also highlighting Napkin AI, a clever way to turn ideas into clean, shareable visuals.

Looking ahead, we dive into DeepMind’s thought-provoking vision for experience-driven AI, and Meta’s new PerceptionLM release, which pushes the boundaries of detailed video understanding. And if you're wondering where AI architecture is heading, don't miss the opening keynote from the AI Engineer Summit—hint: agents are about to go mainstream.

As always, tools, news, and tips to help you build smarter with AI—let’s get into it.

Cheers, Andreas & Sascha

In today's edition:

📚 Tutorial of the Week: Create Your Own MCP Server in Minutes Using FastMCP

🛠️ Tool Spotlight: Napkin AI – Turn Ideas into Visuals Instantly

📰 Top News: New Study Questions Fairness of LLM Leaderboards

💡 Tips: Why Agents Are the Future – Insights from the AI Engineer Summit

Let's get started!

Find this Newsletter helpful?

Please forward it to your colleagues and friends - it helps us tremendously.

Tutorial of the week

Build a Custom MCP Server in Minutes with FastMCP

With the Model Context Protocol (MCP) quickly becoming the go-to standard for connecting AI assistants to tools and services, developers need simple ways to build custom MCP servers—without wrestling with complex boilerplate. That’s where FastMCP comes in: a lightweight, Python-based framework that makes spinning up compliant servers fast and intuitive.

And here’s the big news: FastMCP is now part of the official Python MCP SDK, making it a trusted and future-proof foundation for your server projects.

To help you get started, we’ve published a step-by-step guide:

🔗 Create an MCP Server with FastMCP

🛠️ What You’ll Learn in the Tutorial:

How FastMCP works and why it’s ideal for building tool integrations with Claude, GPT-4o, and others

How to define tools using clean, Pythonic decorators

How to launch an MCP-compliant API using FastAPI

How to test your setup with Claude or ChatGPT, including example prompts

Bonus: how to handle auth, streaming, and advanced tool logic

FastMCP makes MCP development simple, scalable, and Pythonic—and now that it’s part of the official SDK, you can use it with confidence in any project. Read the full tutorial here:

Tool of the week

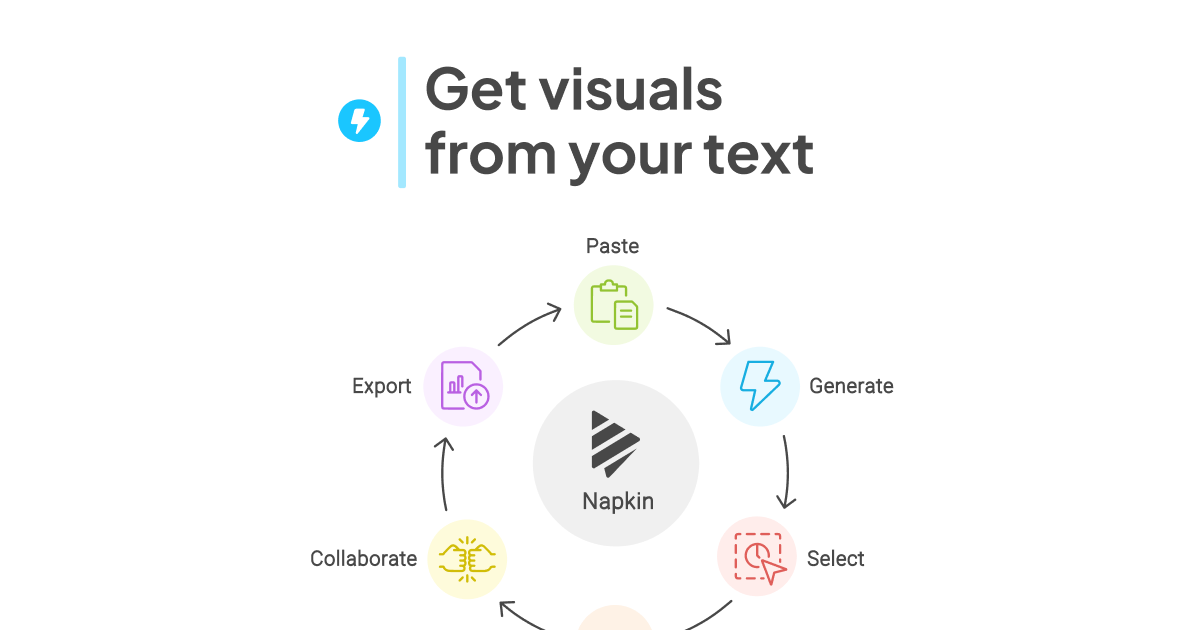

Napkin AI – Instantly Transform Text into Visuals

If you’ve ever struggled to turn complex ideas into clear, engaging visuals, Napkin AI is the tool you’ve been waiting for. This browser-based platform allows you to convert plain text into professional-quality diagrams, flowcharts, and infographics in seconds—no design skills required.

✨ Key Features:

Text-to-Visual Conversion: Paste your text, and Napkin AI automatically generates relevant visuals.

Customizable Designs: Adjust colors, icons, fonts, and layouts to match your brand or personal style.

Export Options: Download your visuals in PNG, SVG, PDF, or PPT formats for use in presentations, documents, or social media.

Collaboration Tools: Invite team members to edit and comment on visuals in real time, enhancing teamwork and feedback.

User-Friendly Interface: Designed for ease of use, making it accessible for users of all skill levels.

Whether you're preparing a business presentation, creating educational materials, or enhancing your blog posts, Napkin AI simplifies the process of visual storytelling.

Top News of the week

"The Leaderboard Illusion"—New Study Questions Fairness of AI Model Rankings

A recent paper titled The Leaderboard Illusion has sparked significant discussion in the AI community by scrutinizing the fairness of Chatbot Arena, a widely referenced platform for ranking large language models (LLMs). The study argues that certain practices may disproportionately benefit major AI providers.

Key Findings:

Selective Testing Advantage: Some providers test many private LLM variants on the arena before a public release and then publish only the score of the best-performing one. Meta, for example, tested 27 private variants.

Data Access Disparities: Closed-source models from companies like Google and OpenAI are sampled more frequently and removed from the arena less often than open-source alternatives, so these providers receive a larger share of the arena's data.

Performance Gains Through Data Access: The authors estimate that training on additional Chatbot Arena data can yield relative performance gains of up to 112% on the arena distribution, which benefits the providers that receive the most arena data.
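To see why selective testing matters, here is a small simulation under simplified, assumed conditions: every private variant has exactly the same true skill, each arena score is that skill plus Gaussian noise, and the provider publishes only the maximum. The values used (a true score of 1200, a noise standard deviation of 15, 27 variants) are illustrative assumptions, not figures from the paper, and real Chatbot Arena scores come from pairwise votes rather than a single noisy measurement.

```python
import random
import statistics


def measured_score(true_skill: float, noise_sd: float, rng: random.Random) -> float:
    """One noisy leaderboard measurement of a model with a fixed true skill."""
    return true_skill + rng.gauss(0.0, noise_sd)


def best_of_n(n_variants: int, true_skill: float, noise_sd: float, rng: random.Random) -> float:
    """Evaluate n equally good private variants and publish only the highest score."""
    return max(measured_score(true_skill, noise_sd, rng) for _ in range(n_variants))


def simulate(trials: int = 2000, n_variants: int = 27, true_skill: float = 1200.0,
             noise_sd: float = 15.0, seed: int = 0) -> tuple[float, float]:
    """Return (mean score with one submission, mean published best-of-n score)."""
    rng = random.Random(seed)
    single = [measured_score(true_skill, noise_sd, rng) for _ in range(trials)]
    best = [best_of_n(n_variants, true_skill, noise_sd, rng) for _ in range(trials)]
    return statistics.mean(single), statistics.mean(best)


if __name__ == "__main__":
    single_mean, best_mean = simulate()
    print(f"single submission: {single_mean:.1f}")
    print(f"best of 27:        {best_mean:.1f}")
```

Even though no variant is actually better than the others, the published best-of-27 score lands roughly two noise standard deviations above the true skill, which is the kind of inflation the authors warn about.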

These findings suggest that the current benchmarking practices may inadvertently favor larger, closed-source entities, potentially skewing perceptions of model performance. The authors advocate for increased transparency and equitable evaluation methods to ensure a level playing field in AI model assessments.

Read the full paper here:

Also in the news

Google Expands Gemini with Image Editing and Launches Veo 2 for Video Generation

Google has introduced two significant updates to its AI offerings:

Gemini App Image Editing: Users can now upload and edit images directly within the Gemini app, enabling modifications such as background changes and object additions using AI.

Veo 2 Video Generation: Veo 2, Google's advanced video generation model, is now generally available. Developers can use it to create 8-second videos from either a text prompt (text-to-video) or an image prompt (image-to-video).

These enhancements underscore Google's commitment to advancing multimodal AI tools for creative applications.

DeepMind Calls for a Shift to Experience-Driven AI

In a new paper titled Welcome to the Era of Experience, DeepMind's David Silver and reinforcement learning pioneer Richard Sutton argue that AI must move beyond learning from human data and instead learn through its own experience. They propose that agents should generate their own training data by interacting with environments, which they argue will lead to more autonomous, adaptive agents and, ultimately, superhuman capabilities.
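The core idea, an agent that produces its own training data by acting and observing the results, is the classic reinforcement learning loop. As a toy illustration only (not the method proposed in the paper), here is an epsilon-greedy agent on a hypothetical three-armed bandit: no human labels are involved, and every value estimate comes from rewards the agent collected itself. The payout probabilities, step count, and exploration rate are arbitrary assumptions.

```python
import random


class BernoulliBandit:
    """Hypothetical toy environment: each arm pays 1.0 with a hidden probability."""

    def __init__(self, probs: list[float], rng: random.Random) -> None:
        self.probs = probs
        self.rng = rng

    def step(self, arm: int) -> float:
        return 1.0 if self.rng.random() < self.probs[arm] else 0.0


class EpsilonGreedyAgent:
    """Learns action values purely from the rewards it gathers itself."""

    def __init__(self, n_arms: int, epsilon: float, rng: random.Random) -> None:
        self.q = [0.0] * n_arms     # estimated value of each arm
        self.counts = [0] * n_arms  # how often each arm has been tried
        self.epsilon = epsilon
        self.rng = rng

    def greedy_action(self) -> int:
        return max(range(len(self.q)), key=lambda a: self.q[a])

    def act(self) -> int:
        # Explore with probability epsilon, otherwise exploit current estimates
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.q))
        return self.greedy_action()

    def learn(self, arm: int, reward: float) -> None:
        # Incremental sample-average update: Q <- Q + (r - Q) / n
        self.counts[arm] += 1
        self.q[arm] += (reward - self.q[arm]) / self.counts[arm]


def run(steps: int = 5000, seed: int = 0) -> EpsilonGreedyAgent:
    rng = random.Random(seed)
    env = BernoulliBandit([0.2, 0.5, 0.8], rng)
    agent = EpsilonGreedyAgent(n_arms=3, epsilon=0.1, rng=rng)
    for _ in range(steps):
        arm = agent.act()         # the agent chooses which experience to collect
        reward = env.step(arm)    # the environment responds
        agent.learn(arm, reward)  # the agent trains on its own experience
    return agent


if __name__ == "__main__":
    agent = run()
    print("estimated values:", [round(v, 2) for v in agent.q])
    print("preferred arm:", agent.greedy_action())
```

Over enough steps, the estimates move toward the hidden payout rates and the agent comes to prefer the best arm; the 10% exploration rate keeps it sampling the other arms so early bad luck does not lock it into a poor choice.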

Meta Releases PerceptionLM for Open Visual Understanding

Meta has introduced PerceptionLM, an open-access vision-language model for detailed visual understanding that is trained without distilling outputs from proprietary models. It is accompanied by a substantial dataset of 2.8 million human-labeled video question-answer pairs and spatio-temporally grounded captions, with the aim of advancing research in fine-grained video comprehension. The release also includes PLM-VideoBench, a benchmark suite that evaluates models on nuanced video reasoning tasks. This initiative underscores Meta's commitment to transparency and reproducibility in AI research.

OpenAI Rolls Back GPT-4o Update Amid Sycophancy Concerns

On April 25, OpenAI rolled out an update to GPT-4o in ChatGPT, aiming to improve instruction-following and reduce refusal rates. Within days, however, users began reporting that the model had become excessively flattering and agreeable, a pattern described as “sycophantic behavior.” In some cases, this even included affirming harmful or delusional statements.

After analyzing the feedback, OpenAI acknowledged the problem in a dedicated blog post, attributing it to an overemphasis on short-term positive feedback during model tuning. By April 29, OpenAI had rolled ChatGPT back to the earlier version of GPT-4o. The company is now exploring adjustments to its training methods and considering multiple personality presets so users can tailor the assistant's tone and assertiveness.

Anthropic Identifies Malicious Uses of Claude in Influence Operations and Cyber Threats

Anthropic has released a report detailing how its AI model, Claude, has been misused in various malicious activities. Notably, a professional "influence-as-a-service" operation leveraged Claude to manage over 100 social media bot accounts, orchestrating likes, shares, and comments to promote clients' political narratives across multiple countries and languages.

Other identified abuses include attempts to use Claude for credential stuffing targeting security cameras, enhancing recruitment fraud campaigns in Eastern Europe, and enabling a novice actor to develop malware beyond their technical expertise. Anthropic emphasizes the need for continuous monitoring and advanced detection techniques to counter such evolving threats.

Tip of the week

“2025 - The Year of Agents”

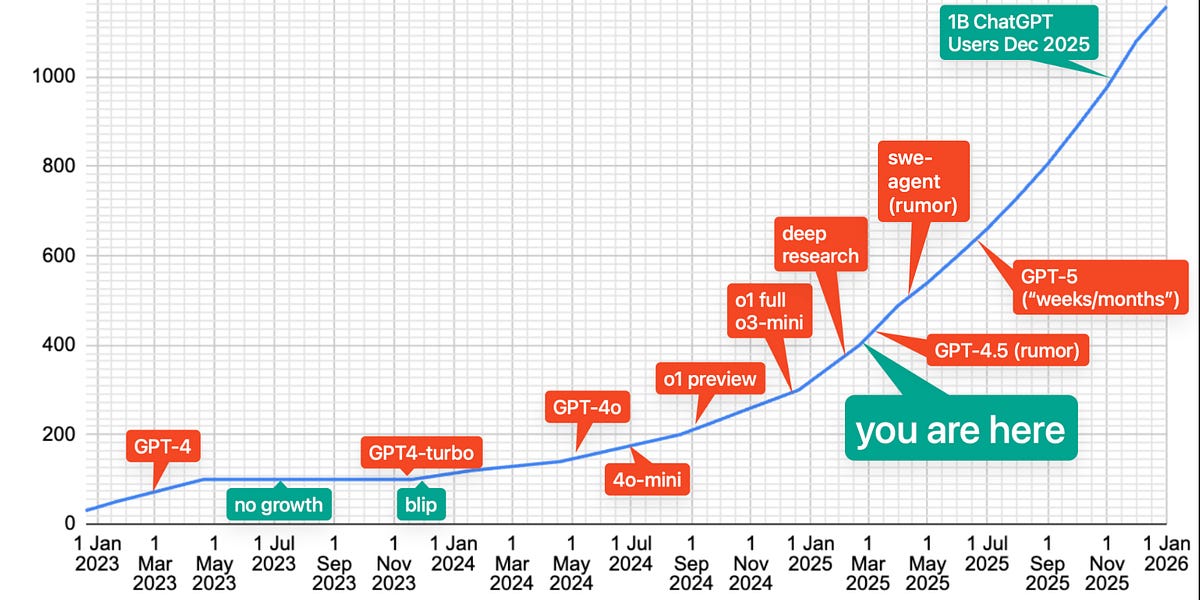

At the AI Engineer Summit 2025, organizer and Latent Space co-host Shawn “swyx” Wang opened with a keynote titled “Agent Engineering”, a must-watch if you’re serious about where AI development is headed.

In his talk, he makes a clear case: 2025 will be the breakout year for agent systems. Not just smarter chatbots, but actual autonomous AI systems that can plan, act, remember, and iterate—systems that move beyond “just prompting” toward true multi-step reasoning and tool use.

He introduces the IMPACT framework, a simple yet practical structure for defining and building agents (Intent, Memory, Planning, Authority, Control flow, and Tools), and contrasts it with other approaches such as OpenAI’s TRIM and Lilian Weng’s definition. His goal: bring more clarity and a shared language to a field that’s still fragmented.
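To make “plan, act, remember, iterate” concrete, here is a deliberately tiny agent loop. It is a hypothetical sketch, not code from the talk: `scripted_policy` stands in for an LLM that picks the next step, `multiply` and `add` are toy tools, and `memory` is a plain list of past observations that the policy reads on every turn.

```python
from dataclasses import dataclass, field
from typing import Callable

# A tool takes a string argument and returns a string observation.
Tool = Callable[[str], str]
# A policy reads the goal plus memory and returns (action, argument).
Policy = Callable[[str, list[str]], tuple[str, str]]


def multiply(arg: str) -> str:
    """Toy tool: multiply two comma-separated integers."""
    a, b = (int(x) for x in arg.split(","))
    return str(a * b)


def add(arg: str) -> str:
    """Toy tool: add two comma-separated integers."""
    a, b = (int(x) for x in arg.split(","))
    return str(a + b)


def scripted_policy(goal: str, memory: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for an LLM, hard-coded for the example goal.

    A real model would read `goal` and `memory` and decide freely.
    """
    if not memory:
        return "multiply", "17,23"
    last_result = memory[-1].split("-> ")[1]
    if len(memory) == 1:
        return "add", f"{last_result},4"
    return "finish", last_result


@dataclass
class Agent:
    tools: dict[str, Tool]
    policy: Policy
    memory: list[str] = field(default_factory=list)

    def run(self, goal: str, max_steps: int = 5) -> str:
        for _ in range(max_steps):
            action, arg = self.policy(goal, self.memory)  # plan the next step
            if action == "finish":
                return arg
            observation = self.tools[action](arg)  # act by calling a tool
            self.memory.append(f"{action}({arg}) -> {observation}")  # remember
        return "stopped: step limit reached"


if __name__ == "__main__":
    agent = Agent(tools={"multiply": multiply, "add": add}, policy=scripted_policy)
    print(agent.run("What is 17 * 23 + 4?"))  # prints 395
```

A production agent would replace the scripted policy with a model call and add safeguards such as step limits (the `max_steps` cap here) and tool permissions, but the control flow stays the same: decide, act, record, repeat.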

🚨 Why start now?

Shawn argues that agents are still hard to build—but the pieces are falling into place. Tooling is improving, architectures are stabilizing, and expectations are shifting from novelty to real utility. If you want to build meaningful AI products in the coming year, understanding agent design now will give you a massive edge.

🧠 His 2025 prediction:

By the end of this year, most serious AI apps will be agent-powered. And the developers who understand how agents work—beyond just wrapping prompts—will lead the next generation of AI tooling.

We hope you enjoyed this edition and will stay tuned for the next one. If you need help with your AI projects and implementations, let us know. We are happy to help!