Pondhouse Data AI - Tips & Tutorials for Data & AI 30

BigQuery via MCP | Open 1T Model Kimi K2 | Markdown from Anything | Context7 Live Docs

Hey there,

This week, we’re spotlighting real tools that make agents and AI assistants actually useful—from integrating BigQuery into Claude with just a few config lines, to turning any document into clean Markdown for better LLM inputs.

We also take a look at Kimi K2, a new 1T-parameter open model optimized for agent use, and Context7, a tool that keeps your LLMs grounded in the latest library docs.

Enjoy the read!

Cheers, Andreas & Sascha

In today's edition:

📚 Tutorial of the Week: Connect BigQuery to Claude with an MCP Server – full setup guide

🛠️ Tool Spotlight: MarkItDown – Convert anything to clean, LLM-ready Markdown

📰 Top News: Moonshot AI releases Kimi K2 – a 1T open model built for agent workflows

💡 Tips: Context7 – Reliable, up-to-date API docs inside your LLM prompts

Let's get started!

Find this Newsletter helpful?

Please forward it to your colleagues and friends - it helps us tremendously.

Tutorial of the week

MCP Server for BigQuery — Connect Your Data Warehouse to AI Agents

In this week’s tutorial, we guide you through setting up an MCP (Model Context Protocol) server in front of BigQuery, allowing AI agents like Claude to access and query your data warehouse seamlessly. Using Google’s MCP Toolbox for Databases, you can expose BigQuery datasets via secure, standardized tools—no custom APIs required.

🚀 What You’ll Learn:

How to install the MCP Toolbox binary or Docker image and authenticate BigQuery access

How to configure tools.yaml to expose SQL-based tools (e.g., get_article_information)

How to run the MCP server locally and connect to it through the MCP Inspector

How to structure tools for dynamic querying: schema inspection and natural-language SQL generation
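
To give a feel for the tools.yaml step, here is a minimal sketch that renders a config with one parameterized query tool. The field names (sources, tools, kind: bigquery, kind: bigquery-sql, parameters, statement) reflect our reading of the Toolbox config format and should be checked against the official docs. The source name, project ID, table, and id column are hypothetical placeholders.

```python
from textwrap import dedent


def render_tools_yaml(project: str, table: str) -> str:
    """Return a tools.yaml body that exposes one BigQuery query as an MCP tool."""
    return dedent(f"""\
        sources:
          bigquery-source:          # hypothetical source name
            kind: bigquery
            project: {project}
        tools:
          get_article_information:
            kind: bigquery-sql
            source: bigquery-source
            description: Look up one article by its ID.
            parameters:
              - name: article_id
                type: string
                description: ID of the article to fetch.
            statement: SELECT * FROM `{table}` WHERE id = @article_id
        """)


if __name__ == "__main__":
    # Placeholder project and table; replace with your own.
    print(render_tools_yaml("my-gcp-project", "my_dataset.articles"))
```

Keeping the SQL fixed and passing only article_id as a parameter means the agent can fill in a value but cannot rewrite the query, which is a sensible starting point before exposing broader SQL access.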

💡 Why This Matters:

Standardized access to BigQuery via MCP enables AI models to query warehouse data without plumbing

Secure and scalable with OAuth2/OpenID, connection pooling, and observability

Extensible structure—start with a single query tool, then expand into full SQL support

Prototype fast, with production-grade defaults from Google’s Toolbox

If you’ve got a data warehouse and want your LLMs to access it directly, this setup is a fast track to LLM-powered analytics.

Tool of the week

MarkItDown — Convert Anything to Markdown, Ready for LLMs

If you work with mixed document formats and need clean, LLM-ready text, MarkItDown is your go-to tool. This lightweight Python utility converts PDFs, Word docs, PowerPoints, Excel sheets, images, audio, HTML, YouTube captions, EPUBs, and more into structured Markdown that LLMs like Claude and GPT-4o can understand effortlessly.

🚀 What Makes It Shine

Multi-format support: Handles documents (PDF, DOCX, PPTX), audio transcription, images with OCR, HTML, ZIP, EPUB—even YouTube transcripts.

Markdown-first output preserves headings, lists, tables, links—ideal for downstream LLM use.

Built-in MCP server support lets you deploy it as an LLM-accessible service with one command via markitdown-mcp.

🧰 Quick Start

pip install 'markitdown[all]'

markitdown path/to/file.pdf -o file.md

Or run it as an MCP server for direct integration with Claude Desktop and other agents.
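
Besides the CLI, MarkItDown can be called from Python. The sketch below assumes the package is installed as shown above and uses the MarkItDown().convert(...) call with its text_content result. The two helper function names are our own, and the import is deferred so the path helper works even without the package.

```python
from pathlib import Path


def markdown_path_for(source: str) -> Path:
    """Return the output path: same folder and name as the source, with a .md suffix."""
    return Path(source).with_suffix(".md")


def convert_to_markdown(source: str) -> Path:
    """Convert one file to Markdown and write it next to the original."""
    # Imported lazily so the helper above works without the package installed.
    from markitdown import MarkItDown

    result = MarkItDown().convert(source)
    target = markdown_path_for(source)
    target.write_text(result.text_content, encoding="utf-8")
    return target
```

Calling convert_to_markdown("path/to/file.pdf") would then write path/to/file.md, mirroring the CLI command above.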

🔧 Latest Upgrades

Plugin architecture, so you can extend support for new file types

Stream-friendly, no temp files—converts in memory

Grouped dependencies, install only what you need (e.g., PDF, audio)

MarkItDown simplifies the “data ingestion” step for LLM apps with broad document support and LLM-ready output. Deploying it as a tool server via MCP turns any document corpus into an agent-accessible knowledge base.

Top News of the week

Meet Kimi K2 – A 1T-Parameter Open Agentic Model

Moonshot AI has released Kimi K2, a 1 trillion-parameter mixture-of-experts language model optimized for agentic use: tool use, multi-step tasks, and autonomous problem-solving. It has a 128K-token context window, ships under a modified MIT license that permits commercial use, exposes OpenAI-style API endpoints, and runs on inference engines like vLLM and TensorRT-LLM.

🧠 Key Performance Highlights:

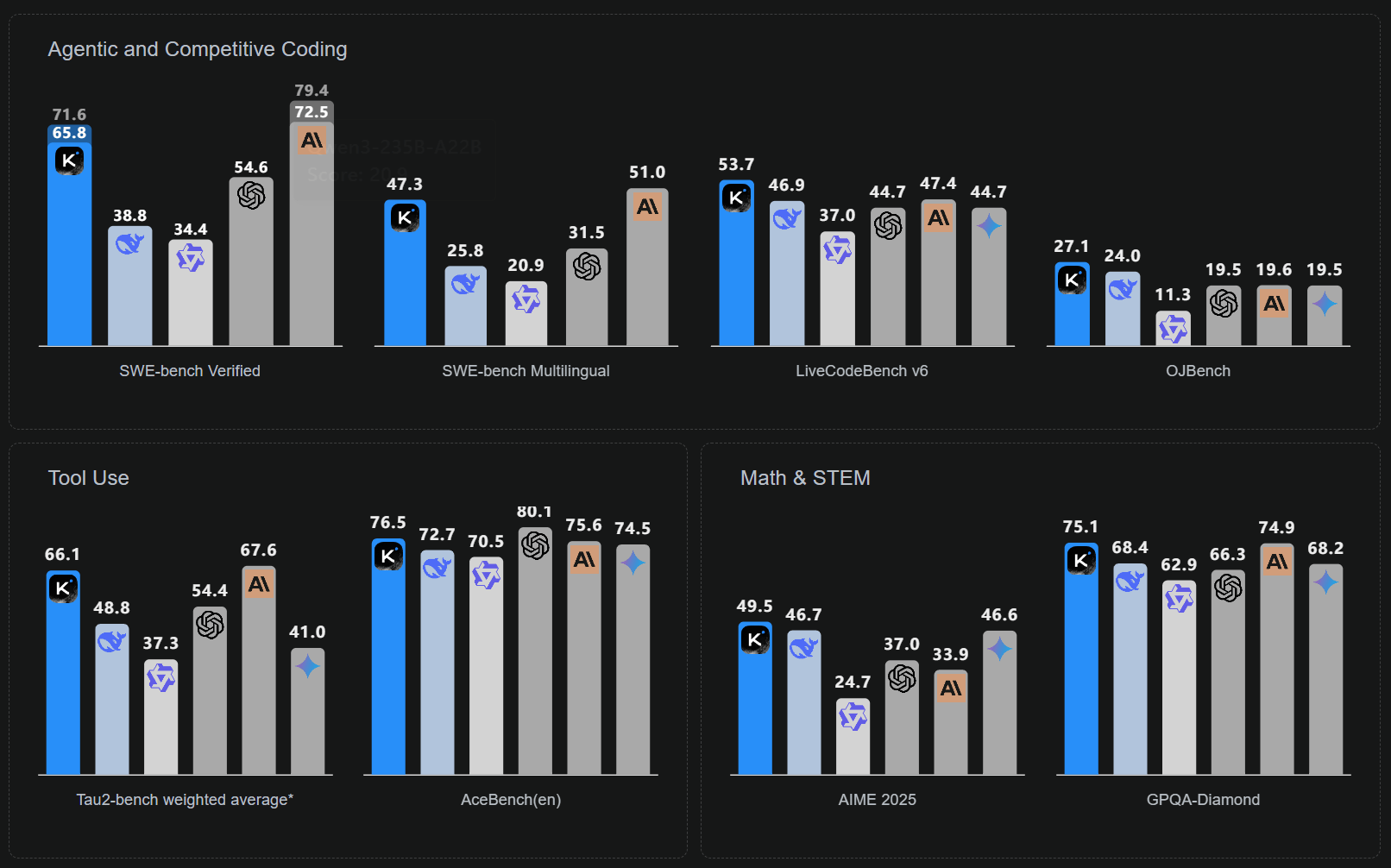

Leads on math and coding benchmarks: 97% on MATH-500, 65.8% on SWE-bench Verified, and solid tool-use results (e.g., 70% on Tau2 and 76% on AceBench).

Agentic demos: performs multi-step workflows like salary analysis and trip planning with tool calls and interactive outputs across web, code, and charts.

Moonshot also introduced MuonClip, an extension of the Muon optimizer, which enabled a stable training run across 15.5 trillion tokens at the 1T-parameter scale while preventing training instability.

Why It Matters:

Sets a new bar for open, agent-capable models, rivaling GPT‑4.1 in coding and reasoning.

Ultra-long contexts expand possibilities for document processing and multi-turn agent conversations.

Open and API-compatible: existing OpenAI or Anthropic workflows can migrate with little effort, which suits enterprise and research use.
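
Because the endpoints follow the OpenAI chat-completions shape, a request can be built with nothing but the standard library. This is a sketch under assumptions: the base URL and model ID below are placeholders we have not verified here, so check them against the provider's documentation before use.

```python
import json
import urllib.request

# Assumptions, not taken from the newsletter: verify both values with the provider.
BASE_URL = "https://api.moonshot.ai/v1"
MODEL = "kimi-k2-0711-preview"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to urllib.request.urlopen would send it. Pointing BASE_URL at a self-hosted vLLM server should be the only change needed for local inference, assuming that server exposes the same route.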

Also in the news

OpenAI Introduces Deep Research API

OpenAI has launched the Deep Research API, enabling GPT to run long, multi-step research tasks with structured memory and modular planning. It’s designed for complex queries that require reasoning, synthesis, and tool use across large content sets.

Hugging Face Shares How They Built Their MCP Server

Hugging Face published a detailed breakdown of their experience building an MCP server for the Hub. They share decisions around transport protocols, streaming modes, and handling session state—along with challenges in client interaction and tool updates. It’s a practical guide for anyone designing agent-ready infrastructure.

Perplexity Launches Comet – an AI-Native Browser

Perplexity has introduced Comet, a browser with built-in AI agents that can search, summarize, interact with websites, and even shop for you. Users can give natural commands like “take control of my browser,” and the agent handles the task across tabs.

OpenAI Launches ChatGPT Agent

OpenAI has released ChatGPT Agent, a multimodal assistant that can browse the web, execute code, interact with apps, and create documents on a secure virtual computer on behalf of Pro, Plus, and Team users. It merges capabilities from Operator and Deep Research into a unified agentic workflow. Before taking any irreversible action, it requests permission, and users can interrupt it at any time.

Tip of the week

Context7 – Real-Time Library Docs for Your Agent Workflows

Context7 acts as an MCP server that brings the latest docs and working code samples directly into your prompts, ensuring LLMs don’t hallucinate outdated APIs. Whether you're working with React, Next.js, Upstash, or any npm library, Context7 fetches version-specific snippets straight from the source.

🚀 Why It Matters

LLMs often rely on old training data or invent APIs. Context7 reduces these hallucinations by supplying fresh, accurate documentation.

Ideal for fast-moving libraries—no more wasted time correcting broken code.

🔧 How to Use It

Install via npx -y @upstash/context7-mcp, or use the one-click add in clients like Cursor, Windsurf, VS Code, Eclipse, Claude, etc.

In your prompt, simply write “use context7” and the tool injects accurate docs on demand.
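
For clients without a one-click option, the server is usually registered in a JSON config file. The sketch below prints an entry in the mcpServers layout that Claude Desktop and Cursor use. The server label "context7" is our choice, and the file name and location depend on your client.

```python
import json


def context7_server_entry() -> dict:
    """Return an MCP client config entry that launches Context7 via npx."""
    return {
        "mcpServers": {
            "context7": {  # label is arbitrary; pick any name you like
                "command": "npx",
                "args": ["-y", "@upstash/context7-mcp"],
            }
        }
    }


if __name__ == "__main__":
    # Paste the printed JSON into your client's MCP config file.
    print(json.dumps(context7_server_entry(), indent=2))
```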

🧰 Pro Tips

Use prompt rules (e.g., in Cursor or Windsurf) to auto-call Context7 whenever you're asking for code examples or API usage.

Context7 is MIT‑licensed, free for personal use, and supports most LLM clients that follow MCP.

Bottom line: For reliable code output and less debugging of hallucinated APIs, Context7 is a must-have tool in any LLM-powered developer workflow.

We hope you liked our newsletter and that you'll stay tuned for the next edition. If you need help with your AI tasks and implementations, let us know. We are happy to help.