Pondhouse Data AI - Tips & Tutorials for Data & AI 32

DeepSeek Beats Claude at 11% Cost | Parse PDFs in One Call | Vercel’s AI Gateway Goes GA | Gemini Market Agent Tutorial

Hey there,

This week’s newsletter is all about smart systems—from a new transformer alternative (MoR) that thinks more efficiently, to a lightweight gateway that makes AI model switching seamless. We’re also digging into PDF analysis with OpenAI, building agents with Gemini and Vercel, and checking out some strong new releases from DeepSeek, Meta, and more.

Plenty of tools, insights, and ideas to level up your AI projects—enjoy the read!

Cheers, Andreas & Sascha

In today's edition:

📚 Tutorial of the Week: Build a Market Research Agent with Gemini and Vercel’s AI SDK

🛠️ Tool Spotlight: Vercel AI Gateway – Unified, Scalable Access to Any Model

📰 Top News: Mixture of Recursions (MoR) – A Smarter, Faster, Leaner Transformer Alternative

💡 Tips: Parse and Analyze PDFs Natively with the OpenAI API

Let's get started!

Find this newsletter helpful?

Please forward it to your colleagues and friends. It helps us tremendously.

Tutorial of the week

Build a Market Research Agent with Gemini and Vercel’s AI SDK

If there’s anything making agents truly practical, it’s smooth integration with the web. In this week’s tutorial, we explore how to build a Market Research Agent using Google’s Gemini model together with Vercel’s AI SDK.

The step-by-step guide walks you through building a Node.js + TypeScript agent that:

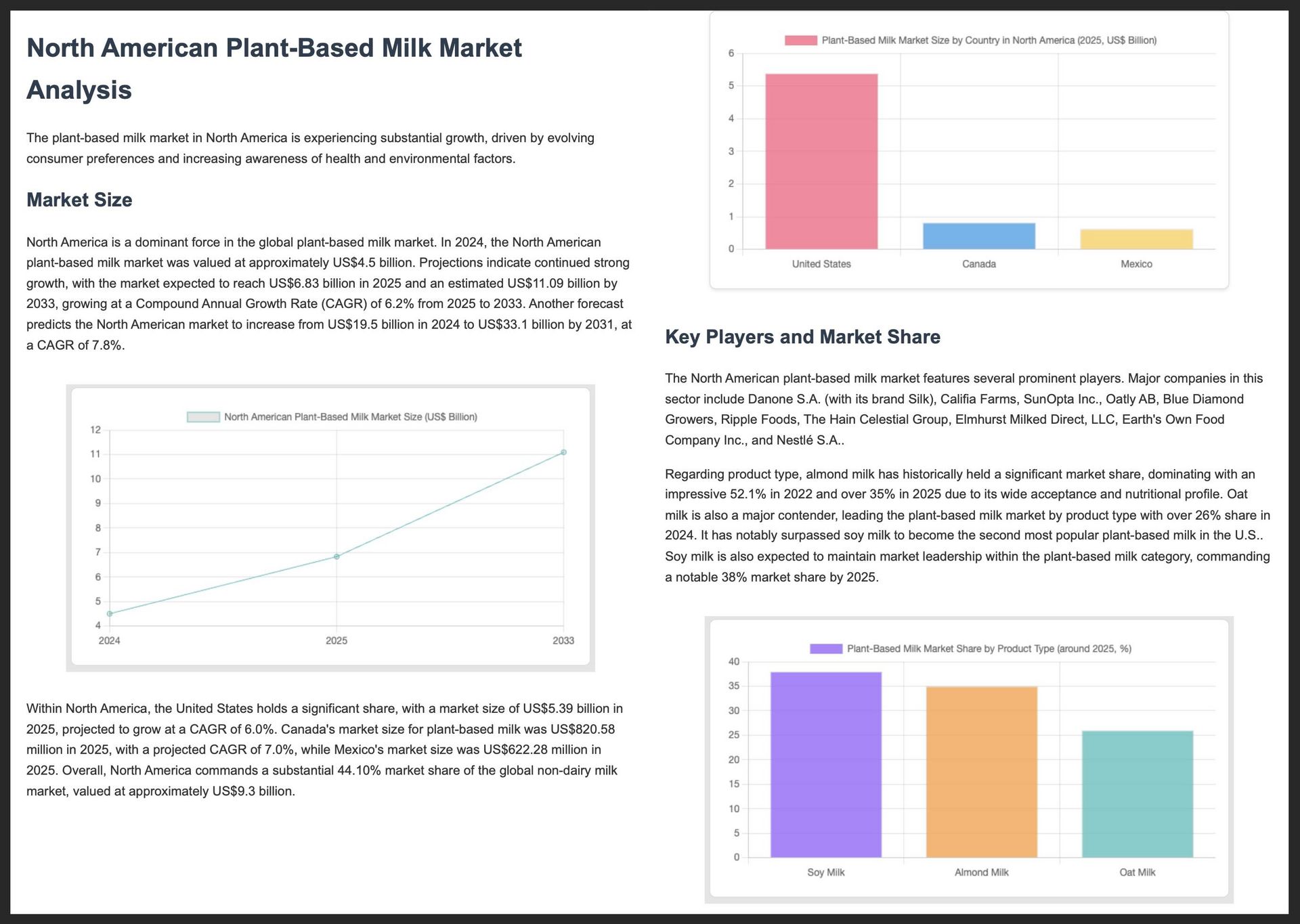

Uses Gemini (via Vercel’s AI SDK + Google Generative AI provider) to search the web for trends like “plant-based milk market size in North America (2024–2025)”.

Extracts structured insights such as key players, market share, and consumer drivers.

Generates visualizations (with Chart.js) and compiles them into a polished HTML report saved as a PDF using Puppeteer.

This tutorial is perfect for developers looking to go beyond simple prompts and build agents that actively research, analyze, and report—all in code that can run locally or be deployed on Vercel. It shows how Gemini can fetch, interpret, and present real-time data in an automated and scalable way.

Feel free to take this sample agent as a baseline and adapt it for your own domains—whether it’s competitive intelligence, industry analysis, or investor dashboards.
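To make the "structured insights" step a bit more concrete, here is a minimal sketch of what validating the model output and turning it into an HTML report could look like. The tutorial itself is written in TypeScript; we use Python here only to illustrate the data flow. The `MarketInsights` shape, the function names, and the sample values are our own assumptions for illustration, not code or data from the tutorial.

```python
import json
from dataclasses import dataclass
from html import escape


@dataclass
class MarketInsights:
    """Hypothetical shape for the structured output of the research step."""
    topic: str
    key_players: list[str]
    market_share: dict[str, float]  # player name -> share in percent
    consumer_drivers: list[str]


def parse_insights(raw_json: str) -> MarketInsights:
    """Validate the model's JSON output before it reaches the report."""
    data = json.loads(raw_json)
    shares = {str(k): float(v) for k, v in data["market_share"].items()}
    if any(v < 0 for v in shares.values()) or sum(shares.values()) > 100.0 + 1e-9:
        raise ValueError("market shares must be non-negative and sum to at most 100")
    return MarketInsights(
        topic=str(data["topic"]),
        key_players=[str(p) for p in data["key_players"]],
        market_share=shares,
        consumer_drivers=[str(d) for d in data["consumer_drivers"]],
    )


def render_report(insights: MarketInsights) -> str:
    """Render a minimal HTML report; a PDF step would print this page."""
    ranked = sorted(insights.market_share.items(), key=lambda kv: -kv[1])
    rows = "".join(
        f"<tr><td>{escape(name)}</td><td>{share:.1f}%</td></tr>"
        for name, share in ranked
    )
    drivers = "".join(f"<li>{escape(d)}</li>" for d in insights.consumer_drivers)
    return (
        f"<h1>{escape(insights.topic)}</h1>"
        f"<table>{rows}</table>"
        f"<ul>{drivers}</ul>"
    )


# Placeholder values for illustration only, not real market data.
SAMPLE = json.dumps({
    "topic": "Example market",
    "key_players": ["Brand A", "Brand B"],
    "market_share": {"Brand A": 40, "Brand B": 25},
    "consumer_drivers": ["price", "health <claims>"],
})
```

Validating before rendering means a malformed model response fails loudly instead of producing a broken chart or report.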

Tool of the week

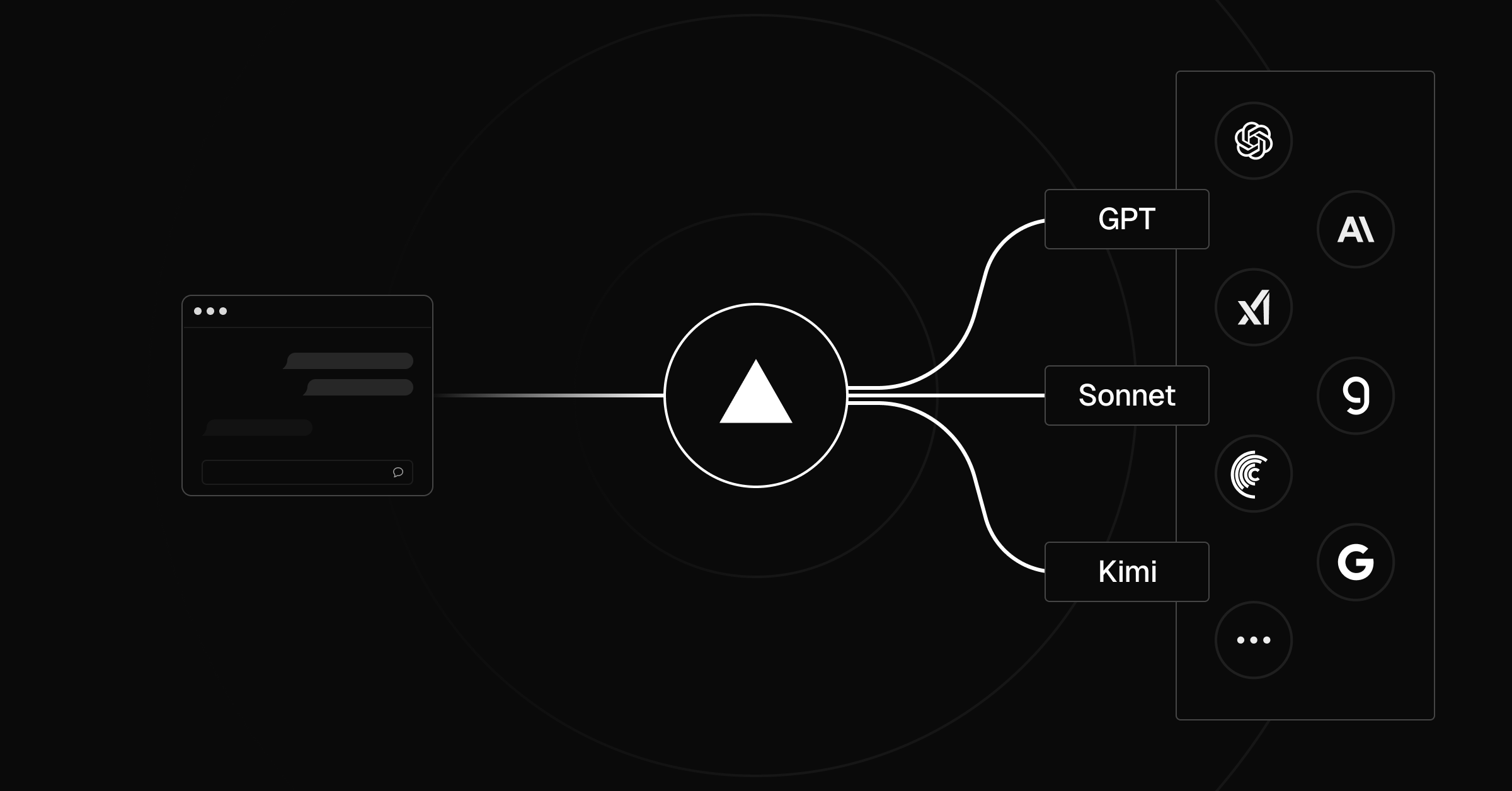

Vercel AI Gateway — Your Unified Access to ANY Model, Anywhere

When it comes to production AI systems, flexibility and reliability are everything. That’s exactly where Vercel AI Gateway shines—it gives you a single API endpoint to access hundreds of models from dozens of providers, without worrying about API keys, rate limits, or vendor lock‑in.

Why the AI Gateway Matters:

Model Flexibility on Demand

Instantly switch providers or model variants with just a one-line code change, no redeploys required. It’s perfect for testing new models or adapting to sudden shifts in performance or pricing.

Hyper-Reliability

Built-in load balancing and automatic failover ensure your app stays up even if one model provider falters. No more failed LLM requests during peak usage.

Unified Spend and Usage Tracking

Forget juggling dashboards: AI Gateway lets you monitor usage, metrics, and cost across all providers from one place.

Ready for Enterprise, Free for Starters

It’s battle-tested infrastructure that already serves millions of users on v0.app, now in general availability. Every Vercel account comes with $5 in AI Gateway credits each month, which is a great way to get started.
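To picture what "automatic failover" means, here is a small client-side sketch of the idea. AI Gateway handles this for you on the server side, so you would not normally write this yourself. The function names, the `provider/model` strings, and the fake client below are all hypothetical and only serve the illustration.

```python
from typing import Callable, Sequence


class AllProvidersFailed(RuntimeError):
    """Raised when every candidate model failed."""


def complete_with_failover(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
) -> tuple[str, str]:
    """Try each model in order and return (model_used, completion).

    `call_model(model, prompt)` stands in for whatever client sends the request.
    """
    errors: list[str] = []
    for model in models:
        try:
            return model, call_model(model, prompt)
        except Exception as exc:  # broad on purpose: any provider error triggers failover
            errors.append(f"{model}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-in for a real client: the first provider is "down".
def fake_call(model: str, prompt: str) -> str:
    if model.startswith("provider-a/"):
        raise ConnectionError("provider unavailable")
    return f"[{model}] echo: {prompt}"


# Switching or reordering models is a change to this one list.
MODELS = ["provider-a/model-x", "provider-b/model-y"]
```

Calling `complete_with_failover("hi", MODELS, fake_call)` skips the failing first entry and answers from the second one.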

Whether you're running agent applications, RAG pipelines, search interfaces, or anything in between, AI Gateway gives you a resilient, future-ready foundation that scales with your needs.

Top News of the week

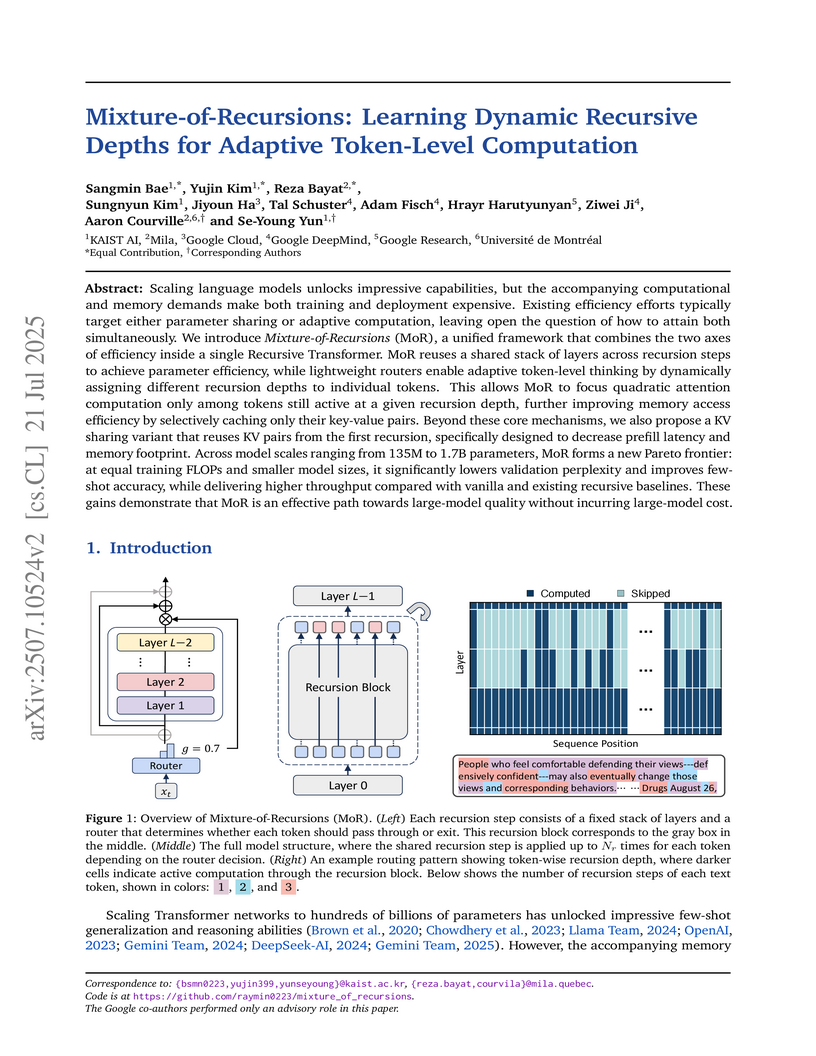

Mixture-of-Recursions (MoR): A Transformer That Thinks Smarter, Faster, and Leaner

Researchers from Google DeepMind, KAIST, and colleagues have introduced Mixture-of-Recursions (MoR)—a new language model architecture that blends parameter sharing with adaptive computation to boost efficiency across the board. Instead of processing every token through all layers, MoR uses a lightweight “router” to decide how much depth each token needs, reusing the same layer block recursively while dynamically applying computation where it counts most.

Why It Matters:

Efficiency Wins: MoR cuts parameter count almost in half and significantly reduces KV-cache memory usage, all while improving inference speed—sometimes doubling throughput compared to standard Transformers.

Performance Gains: Even with fewer resources, MoR matches or exceeds the few-shot and validation performance of larger, more compute-heavy models.

Smarter Workload Routing: By allocating more “thinking depth” to complex tokens and less to trivial ones, MoR ensures compute is spent wisely.
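The routing idea can be shown with a toy example. This is a deliberately simplified sketch and not the paper's implementation: real MoR routes hidden-state vectors with a learned router, while here each "token" is a single number and the router is a hand-written rule. All function names are our own.

```python
import math


def shared_block(x: float) -> float:
    """Stand-in for the shared layer block (same parameters at every recursion)."""
    return math.tanh(1.5 * x + 0.1)


def route_depth(x: float, max_depth: int) -> int:
    """Toy router: inputs with larger magnitude get more recursion steps."""
    score = min(abs(x), 1.0)  # clamp to [0, 1]
    return max(1, math.ceil(score * max_depth))


def mor_forward(tokens: list[float], max_depth: int = 3) -> tuple[list[float], list[int]]:
    """Apply the shared block a token-specific number of times."""
    outputs: list[float] = []
    depths: list[int] = []
    for x in tokens:
        depth = route_depth(x, max_depth)
        h = x
        for _ in range(depth):  # reuse the same block recursively
            h = shared_block(h)
        outputs.append(h)
        depths.append(depth)
    return outputs, depths
```

For the inputs `[0.05, 0.5, 0.9]` with `max_depth=3`, the toy router assigns depths 1, 2, and 3, so the shared block runs 6 times instead of the 9 a fixed-depth pass would need.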

This development is being hailed as a potential “Transformer alternative”, offering a more practical route to powerful large language model capabilities without the massive hardware needs.

Explore the full research details:

🔗 Read the MoR Paper on alphaXiv/arXiv

Also in the news

DeepSeek V3.1 Arrives—Powerful Open-Source Model at a Fraction of the Cost

DeepSeek has released V3.1, showing competitive performance with top proprietary models like Claude 4 Sonnet—while costing just ~11% as much. Benchmarks highlight strong coding and reasoning performance, making it a serious open-source alternative for developers focused on value and scale.

The Illustrated GPT‑OSS – OpenAI’s First Open LLM in Years

Jay Alammar’s “Illustrated GPT‑OSS” breaks down OpenAI’s new GPT‑OSS model—their first open-sourced LLM since GPT‑2. While GPT‑OSS isn’t a massive leap ahead of peers like DeepSeek, Qwen, or Kimi, it’s still a noteworthy addition that brings valuable capabilities like tool use, reasoning, and coding. The detailed visual guide offers rich architectural insights and a clear overview of how modern LLMs are evolving.

Qwen‑Image‑Edit—AI-Powered Image Editing That Understands What You're Trying to Do

Alibaba introduces Qwen‑Image‑Edit, a cutting-edge open-source image editor built on their 20B‑parameter Qwen‑Image model. It offers both semantic editing (e.g., style transfer, object rotation, character consistency) and appearance editing (fine-tuned object insertion/removal while preserving surrounding details). The model also delivers precise text editing in both English and Chinese, maintaining original font and layout—making it ideal for creating or refining graphics, posters, and multilingual content.

Despite its size, Qwen‑Image‑Edit is fully Apache 2.0 licensed, meaning it’s free for both commercial and personal use.

Google Releases Gemma 3 270M—An Ultra-Efficient AI for Edge Devices

Google's new Gemma 3 270M is a tiny, 270M-parameter model built for fast, private, on-device inference. It runs efficiently even on phones and browsers. Despite its size, it performs well in instruction-following tasks and is openly available via Hugging Face and Ollama.

Meta Releases DINOv3 – Vision Model Trained Without Labels

Meta has introduced DINOv3, a massive self-supervised vision model trained on 1.7 billion unlabeled images. Despite using no human annotations, it achieves state-of-the-art results in tasks like object detection and segmentation. It’s already being used by organizations like NASA and the World Resources Institute.

Tip of the week

Parse and Analyze PDFs Natively with OpenAI’s API

The OpenAI API now supports direct PDF file input, making it incredibly easy to analyze documents without conversion hurdles. Models like GPT‑4o, GPT‑4o-mini, and o1 with vision capabilities can process PDFs by extracting both text and visible elements—diagrams, charts, and images—in a single request.

Why this changes the game:

Seamless PDF handling — No need for OCR or external parsing; just upload a PDF or include it as Base64 and let the model interpret both content and context.

Rich, structured analysis — Use GPT to summarize content, extract data, or answer questions directly from a PDF, even when key insights are embedded in visuals.

Flexible input options — PDFs can be uploaded via the Files API (yielding a file ID) or sent directly as Base64-encoded strings—perfect for dynamic workflows.

What you can do with it:

Analyze research papers for key findings and figures

Turn reports into bullet-point summaries with context retention

Extract structured data from documents with both text and visuals

This feature streamlines document workflows—no pre-processing required, just smart, direct analysis.
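Here is a minimal sketch of the two input options, building the request body without sending it. The field names (`input_file`, `input_text`, `file_data`, `file_id`) follow our reading of OpenAI's file-input guide for the Responses API, so please check the current documentation before relying on them. The helper function names are our own.

```python
import base64


def pdf_part_from_bytes(pdf_bytes: bytes, filename: str) -> dict:
    """Inline a PDF as a Base64 data URL."""
    encoded = base64.b64encode(pdf_bytes).decode("ascii")
    return {
        "type": "input_file",
        "filename": filename,
        "file_data": f"data:application/pdf;base64,{encoded}",
    }


def pdf_part_from_file_id(file_id: str) -> dict:
    """Reference a PDF that was uploaded earlier through the Files API."""
    return {"type": "input_file", "file_id": file_id}


def build_request(model: str, pdf_part: dict, question: str) -> dict:
    """Assemble a request body: one user message with the PDF and a question."""
    return {
        "model": model,
        "input": [
            {
                "role": "user",
                "content": [pdf_part, {"type": "input_text", "text": question}],
            }
        ],
    }
```

With the official Python SDK, a body like this could be passed along as `client.responses.create(**body)`. The Base64 route suits files generated on the fly, while a file ID avoids re-sending a large PDF with every request.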

We hope you liked our newsletter and that you will stay tuned for the next edition. If you need help with your AI tasks and implementations, let us know. We are happy to help.