
Pondhouse Data AI - Tips & Tutorials for Data & AI 34

Deterministic LLMs Are Here | Microsoft's AI Course | Claude Creates Office Documents | Mistral Raises €1.7B

Hey there,

This week’s edition is packed with breakthroughs and practical tools. From Microsoft’s beginner-friendly AI curriculum to a powerful workflow framework in Sim, we're diving into what’s shaping AI at every level. Google is improving factual accuracy with smarter decoding, Claude now executes code, and Mistral is putting Europe firmly on the AI map with fresh funding. Plus, Thinking Machines tackles one of the most frustrating problems in LLMs: non-determinism.

Lots to explore—enjoy the read!

Cheers, Andreas & Sascha

In today's edition:

📚 Tutorial of the Week: Microsoft’s AI-for-Beginners – A Full Curriculum in One GitHub Repo

🛠️ Tool Spotlight: Sim – Build and Deploy Agent Workflows Without Vendor Lock-In

📰 Top News: Thinking Machines Lab Solves the “Same Prompt, Different Output” Problem

💡 Tips: Build MCP Tools for User Goals, Not Just APIs – Vercel’s Second-Wave Playbook

Let's get started!

Find this newsletter helpful?

Please forward it to your colleagues and friends - it helps us tremendously.

Tutorial of the week

Microsoft’s “AI‑For‑Beginners” — A Full Curriculum in One Repo

If you’re new to AI or looking to sharpen your foundations, Microsoft’s AI‑For‑Beginners GitHub curriculum is a fantastic resource. It offers a 12‑week, 24‑lesson course with labs, quizzes, and notebooks—designed to cover everything from symbolic AI to deep learning, vision, text models, and ethics.

What You’ll Learn

AI history and symbolic approaches first, then neural networks, convolutional vision models, GANs, and text embeddings.

Hands‑on labs in both TensorFlow and PyTorch, so you build practical intuition, not just theory.

Ethics and responsible AI, multi‑agent systems, and less‑common approaches like genetic algorithms.

This repo is great for beginners, students, or anyone who wants a structured learning path that doesn’t assume prior depth. It’s free, open source (MIT‑licensed), and available in dozens of languages.

Tool of the week

Sim — Build & Deploy Agent Workflows Fast & Open

Sim by SimStudioAI is an open‑source platform designed to help you build, deploy, and manage AI agent workflows easily. It’s low‑code/no‑code friendly, supports self‑hosting, multiple models, and tool integrations, and offers flexibility in how you run your agents.

🔍 Why Sim is worth checking out

Flexible deployment models: You can run Sim in the cloud (via sim.ai) or self‑host it using Docker containers, dev containers, or a local setup with Ollama.

Supports many LLMs and embedding setups: Works with local or remote AI models, uses pgvector (a PostgreSQL extension) to store and search embeddings, and lets you add knowledge bases or semantic search.

Workflow & agent orchestration: Sim provides a UI for stitching together tools, agents, and actions. You can define triggers, integrate APIs, or use prompt‑based tools in your workflows.

Open license & community traction: Licensed under Apache‑2.0 and with over 15k stars on GitHub, it’s quickly gaining attention in the AI tooling ecosystem.
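For readers new to pgvector: it stores embeddings in a vector column and ranks rows by distance, with its <=> operator returning cosine distance. The pure-Python sketch below mimics that kind of top-k knowledge-base lookup so the idea is visible without a database. The document IDs, the 3-dimensional vectors, and the table and column names in the SQL comment are made up, and we have not checked how Sim itself structures its tables or queries.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity, the quantity pgvector's <=> operator returns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query, rows, k=2):
    """Return the ids of the k rows closest to the query embedding.

    Roughly what this SQL would do (table/column names are hypothetical):
        SELECT id FROM kb_chunks ORDER BY embedding <=> '[...]' LIMIT 2;
    """
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [row_id for row_id, _ in ranked[:k]]


# Toy knowledge base of (chunk id, embedding); real embeddings have hundreds of dimensions.
kb = [
    ("pricing-faq", [0.9, 0.1, 0.0]),
    ("refund-policy", [0.8, 0.3, 0.1]),
    ("api-auth-guide", [0.0, 0.2, 0.9]),
]

print(top_k([1.0, 0.2, 0.0], kb))  # ['pricing-faq', 'refund-policy']
```

In an agent, the returned chunks would then be pasted into the prompt as context, which is what "knowledge base lookup" usually amounts to.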

⚒️ Where it really shines / Use cases

Teams that want to spin up agents quickly without tight dependence on a single vendor.

Projects needing embeddings, semantic search, or knowledge base lookups built into their agents.

Scenarios where you want local control (self‑hosted) or want to avoid the latency and cost overhead of constant cloud API calls.

Top News of the week

LLMs That Don’t Change Their Mind — Solving AI’s Repeatability Problem

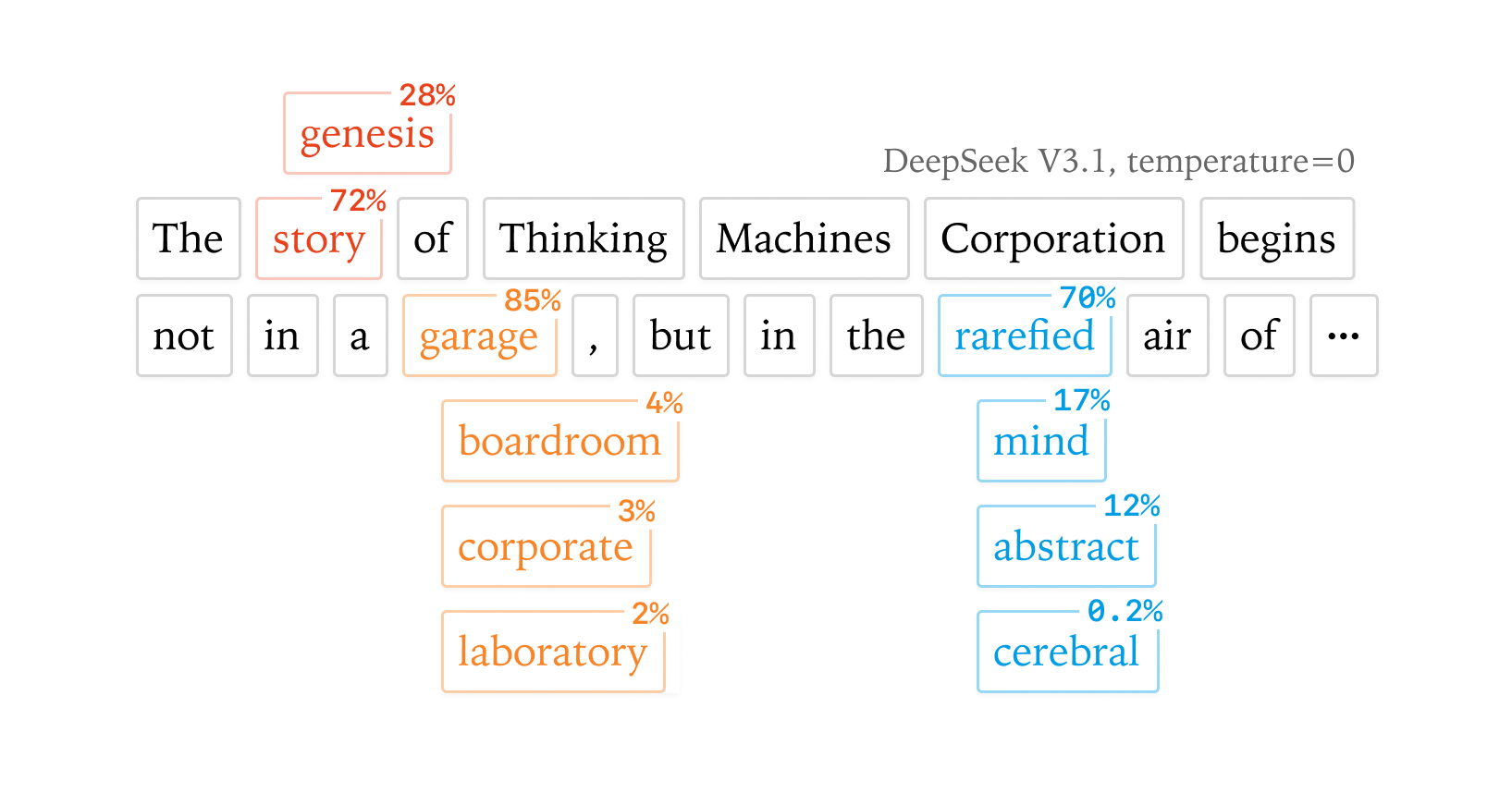

You’d think setting temperature=0 would give you the same output every time. As many devs know, it doesn’t, at least not until now. In a new deep dive from Thinking Machines Lab, the AI lab founded by former OpenAI CTO Mira Murati, Horace He and colleagues explain why LLM inference still behaves unpredictably and how to make it fully deterministic.

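The root cause? Not randomness in sampling, but a lack of batch invariance. Floating-point addition is non-associative, so the order in which a GPU kernel adds numbers affects the result, and many kernels change that order depending on the batch size, which in turn depends on how busy the inference server is. Their fix: batch-invariant kernels that keep the same reduction order regardless of batch size. In their experiment, 1,000 completions of the same prompt came out bit-identical.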
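A toy Python sketch of the mechanism (not Thinking Machines' code): the same row of numbers is summed with a split reduction whose split count depends on batch size, as some GPU kernels do for performance. The heuristic pick_num_splits is hypothetical, and real kernels choose their strategies in far more involved ways; the point is only that regrouping float additions changes the result, while fixing the strategy makes it batch-invariant.

```python
def sequential_sum(values):
    """Plain left-to-right float accumulation.

    An explicit loop is used on purpose: recent Python versions use compensated
    summation in the built-in sum(), which would hide the rounding effect.
    """
    total = 0.0
    for v in values:
        total += v
    return total


def split_sum(values, num_splits):
    """Reduce contiguous chunks separately, then combine the partial sums."""
    chunk = -(-len(values) // num_splits)  # ceiling division
    partials = [sequential_sum(values[i:i + chunk]) for i in range(0, len(values), chunk)]
    return sequential_sum(partials)


def pick_num_splits(batch_size):
    """Hypothetical kernel heuristic: split the reduction when the batch is small
    (to use more parallelism), don't split when the batch is large."""
    return 2 if batch_size < 4 else 1


def reduce_row(row, batch_size):
    # Batch-dependent: the same row is grouped differently depending on server load.
    return split_sum(row, pick_num_splits(batch_size))


def batch_invariant_reduce(row, batch_size, num_splits=1):
    # Batch-invariant: the reduction strategy is fixed and ignores batch_size.
    return split_sum(row, num_splits)


# Floating-point addition is not associative:
print((0.1 + 1e20) - 1e20)  # 0.0
print(0.1 + (1e20 - 1e20))  # 0.1

row = [1e20, 0.1, -1e20, 0.1]
print(reduce_row(row, batch_size=1), reduce_row(row, batch_size=8))  # 0.0 0.1
print(batch_invariant_reduce(row, 1), batch_invariant_reduce(row, 8))  # 0.1 0.1
```

In this toy, fixing the strategy means giving up the extra parallelism that splitting offers at small batch sizes; expect a similar kind of performance cost as the price of batch invariance on real hardware.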

🧠 Why it matters:

✅ Deterministic output improves testability, debugging, and reproducibility

🧩 Great for inference caching, audit trails, and RL fine-tuning

📦 Works with real models like Qwen and LLaMA—open-sourced in their repo
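Why determinism matters for caching: a response cache is only a faithful substitute for recomputation if the same request would produce the same output. A minimal sketch, assuming a deterministic backend; fake_generate and the model name are hypothetical stand-ins for a real inference call.

```python
import hashlib
import json


def cache_key(model, prompt, params):
    """Stable key: same model, prompt, and sampling params give the same key."""
    payload = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class CachedLLM:
    """Wraps a generate(model, prompt, params) callable with a response cache.

    Serving a cached answer is equivalent to recomputing it only if inference
    is deterministic; with batch-dependent kernels a fresh call could differ.
    """

    def __init__(self, generate):
        self._generate = generate
        self._cache = {}
        self.calls = 0  # number of real (uncached) generations

    def complete(self, model, prompt, **params):
        key = cache_key(model, prompt, params)
        if key not in self._cache:
            self.calls += 1
            self._cache[key] = self._generate(model, prompt, params)
        return self._cache[key]


def fake_generate(model, prompt, params):
    # Hypothetical deterministic backend used only for this sketch.
    return f"[{model}] answer to: {prompt}"


llm = CachedLLM(fake_generate)
prompt = "What is batch invariance?"
first = llm.complete("demo-model", prompt, temperature=0)
second = llm.complete("demo-model", prompt, temperature=0)
print(first == second, llm.calls)  # True 1
```

The same property makes snapshot tests meaningful: with deterministic inference, asserting on an exact stored output is a real regression check rather than a flaky one.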

This could be a major step forward in production-grade AI, where “close enough” isn't always good enough.

Also in the news

Google Boosts LLM Accuracy with SLED Decoding

Google researchers introduced SLED, a decoding method that taps into all model layers, not just the final one, to improve factual accuracy. It improves factual accuracy by up to 16% on benchmarks at the cost of only a small amount of extra compute.
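One common way to "tap into" intermediate layers, used by early-exit style decoding methods, is to push each layer's hidden state through the model's own output head to get a next-token distribution per layer. The toy below shows that readout plus a naive fixed-weight blend with the final layer. The identity output head, the hidden states, and the alpha mixing rule are all our own assumptions; this is not SLED's published update rule.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def project(hidden, head):
    """Early-exit readout: apply the shared output head (one weight row per token)."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in head]


def blended_distribution(hidden_states, head, alpha=0.3):
    """Blend the final layer's distribution with the average of earlier layers'.

    Naive fixed-weight mixing for illustration only; NOT the SLED algorithm.
    """
    per_layer = [softmax(project(h, head)) for h in hidden_states]
    final, early = per_layer[-1], per_layer[:-1]
    early_avg = [sum(p[i] for p in early) / len(early) for i in range(len(final))]
    return [(1 - alpha) * f + alpha * e for f, e in zip(final, early_avg)]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


vocab = ["A", "B", "C"]
head = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # toy identity output head
hidden_states = [[2.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 0.2, 0.0]]  # two early layers, then final

final_only = softmax(project(hidden_states[-1], head))
blended = blended_distribution(hidden_states, head)
print(vocab[argmax(final_only)], vocab[argmax(blended)])  # B A
```

Here the final layer alone narrowly prefers "B", while the earlier layers strongly favor "A", so mixing them in flips the choice; methods like SLED replace the naive blend with a more principled way of using that extra signal.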

🔗 Read the full blog post

Claude Gets File Creation and Code Execution

Anthropic has added code execution and file creation to Claude, allowing it to run code, generate files, and share them with users directly inside chats. This brings it closer to the capabilities of ChatGPT and Gemini, especially for tasks like data analysis, scripting, and plotting.

🔗 Read the announcement

Mistral Raises €1.7B in Series C to Power Europe’s AI Ambitions

Mistral has closed a massive €1.7 billion Series C round, pushing its valuation to €11.7B and further solidifying its position as Europe’s AI flagship. The round was led by ASML, with participation from investors like Nvidia, Samsung, and Salesforce. The funding will accelerate development of open-weight models and scale infrastructure for real-world AI deployment.

🔗 Read the full announcement

Oracle Stock Surges on AI and OpenAI Momentum

Oracle shares jumped after reports revealed deeper integration with OpenAI and Microsoft for cloud and AI workloads. The company is now seen as a key backend player in the growing AI infrastructure race, further boosted by strong quarterly cloud revenue.

🔗 Read the article

Is In-Context Learning Actually Learning?

A new paper questions whether in-context learning (ICL) in LLMs involves real learning or just pattern matching. The authors find that while models can generalize in some settings, they often rely on shallow heuristics, especially with limited examples. It challenges assumptions behind few-shot prompting and suggests ICL may not equal “learning” in the traditional sense.

🔗 Read the paper

Tip of the week

Build MCP Tools for User Goals, Not Just APIs

Vercel just published “The Second Wave of MCP: Building for LLMs, Not Developers,” a guide on moving Model Context Protocol (MCP) tools beyond thin API wrappers toward tools that handle complete workflows the way users actually expect.

🚀 What You’ll Learn:

Why exposing many small API-wrapped tools forces an LLM to reinvent workflows every time—and how that leads to inconsistent behavior and wasted compute.

How to design tools around user intentions: for example, a single deploy_project(...) tool that handles creating repos, setting up domains, env vars, etc., rather than making the LLM call several separate APIs.

Advice on combining multiple low-level operations into a single MCP tool that manages orchestration, error recovery, and conversational status updates.

Guidelines for testing workflow-shaped tools with real user requests, iterating based on where LLMs ask for clarifications or fail.
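A minimal sketch of the difference, assuming a hypothetical deployment platform: the four low-level functions stand in for separate API calls, and deploy_project is the single intent-level tool the LLM would see. In a real server you would register only deploy_project as a tool (for example via an official MCP SDK); that wiring is omitted so the sketch runs with the standard library alone.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


# Hypothetical low-level operations, each standing in for one platform API call
# that a "thin wrapper" MCP server would expose to the LLM as a separate tool.
def create_repo(name: str) -> str:
    return f"git@example.com:{name}.git"


def set_env_vars(project: str, env: Dict[str, str]) -> None:
    pass  # would call the platform's env-var endpoint


def add_domain(project: str, domain: str) -> None:
    if "." not in domain:
        raise ValueError(f"invalid domain: {domain!r}")


def trigger_deploy(project: str) -> str:
    return f"https://{project}.example.app"


@dataclass
class DeployResult:
    ok: bool
    url: Optional[str] = None
    steps: List[str] = field(default_factory=list)  # human-readable status updates
    error: Optional[str] = None


def deploy_project(name: str, domain: Optional[str] = None,
                   env: Optional[Dict[str, str]] = None) -> DeployResult:
    """Intent-level tool: "deploy my project" is one call; the tool orchestrates."""
    result = DeployResult(ok=False)
    try:
        repo = create_repo(name)
        result.steps.append(f"Created repository {repo}")
        if env:
            set_env_vars(name, env)
            result.steps.append(f"Set {len(env)} environment variable(s)")
        if domain:
            add_domain(name, domain)
            result.steps.append(f"Attached domain {domain}")
        result.url = trigger_deploy(name)
        result.steps.append(f"Deployed to {result.url}")
        result.ok = True
    except ValueError as exc:
        # Report what finished and what failed, so the LLM can ask the user for a fix
        # instead of guessing which of several low-level calls went wrong.
        result.error = str(exc)
    return result


good = deploy_project("demo", domain="demo.example.com", env={"NODE_ENV": "production"})
print(good.ok, good.url)  # True https://demo.example.app
bad = deploy_project("demo", domain="localhost")
print(bad.ok, bad.error)  # False invalid domain: 'localhost'
```

Because the tool returns both the completed steps and a readable error, the model can tell the user exactly what happened and what to fix, rather than retrying a chain of calls it assembled itself.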

⚡ Why This Matters Now:

MCP is becoming a standard, but API compatibility alone doesn't make agents good: agents built on MCP work best when their tools match what users want done, not just what endpoints exist. Designing tools this way boosts reliability, reduces confusion, and makes agents feel more dependable.

We hope you enjoyed this edition; stay tuned for the next one. If you need help with your AI tasks and implementations, let us know. We're happy to help!