Pondhouse Data AI - Tips & Tutorials for Data & AI 37

Frontier AI Model 'Personalities' Revealed | OpenAI Safety Models | VISTA Self-Improving Video Generation | Transformers Visual Guide

Hey there,

This week’s edition is packed with insights and breakthroughs from the world of AI and data. We’re demystifying transformer architectures with a must-read visual guide, spotlighting Tinker, the flexible API that’s changing the game for efficient LLM fine-tuning, and unpacking Anthropic’s new study on the hidden personalities and value conflicts in leading AI models like Claude, GPT-4, and Gemini. Plus, we’ll explore creative prompting with Verbalized Sampling and highlight fresh research on brain-inspired agents and self-improving video generation.

Let’s dive in!

Cheers, Andreas & Sascha

In today's edition:

📚 Tutorial of the Week: Visual guide to transformer architectures

🛠️ Tool Spotlight: Tinker API for efficient LLM fine-tuning

📰 Top News: Anthropic study on AI model personalities

💡 Tip: Boost LLM creativity with Verbalized Sampling

Let's get started!

Tutorial of the week

Transformers Demystified: A Visual, Intuitive Guide

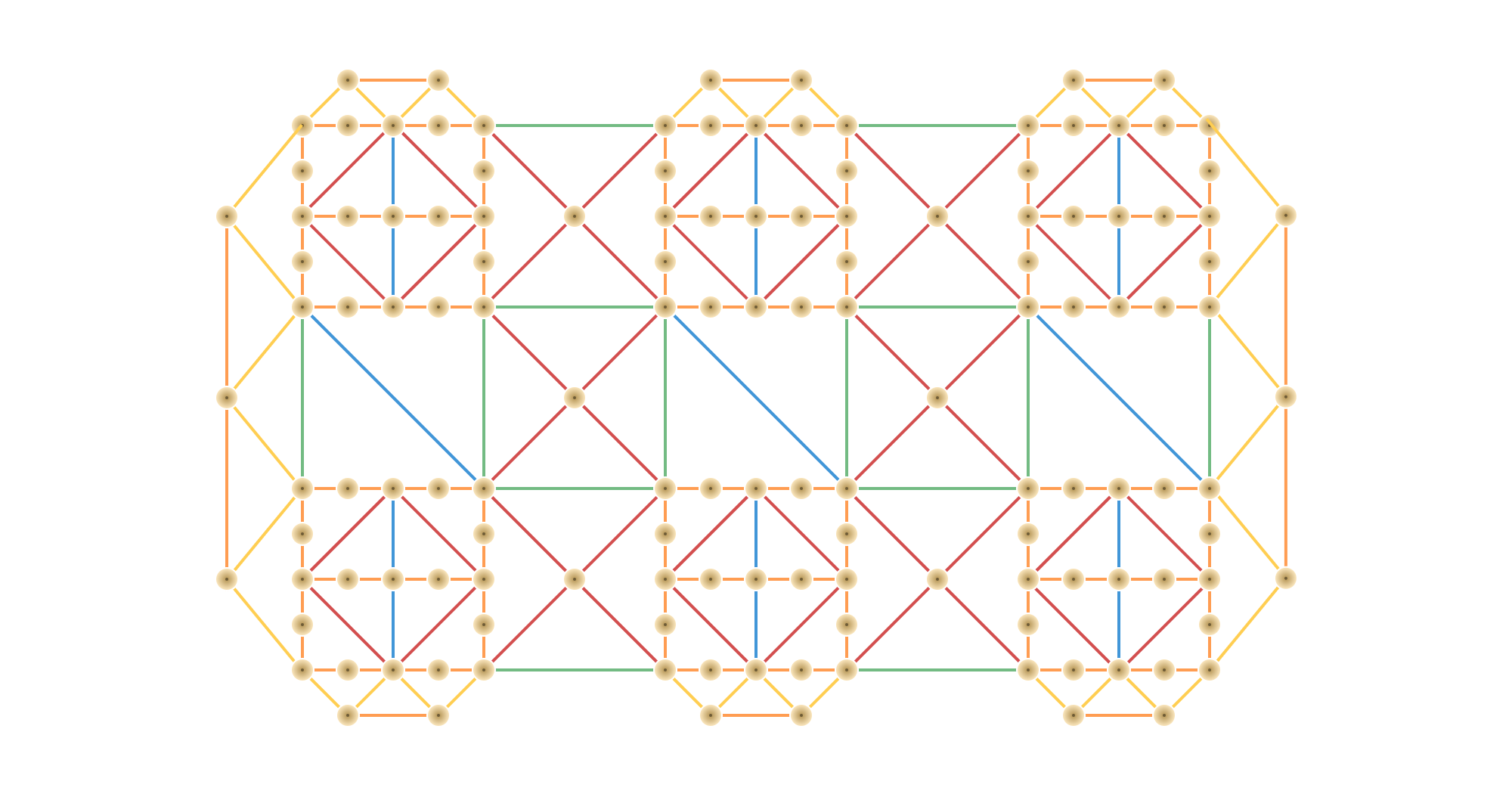

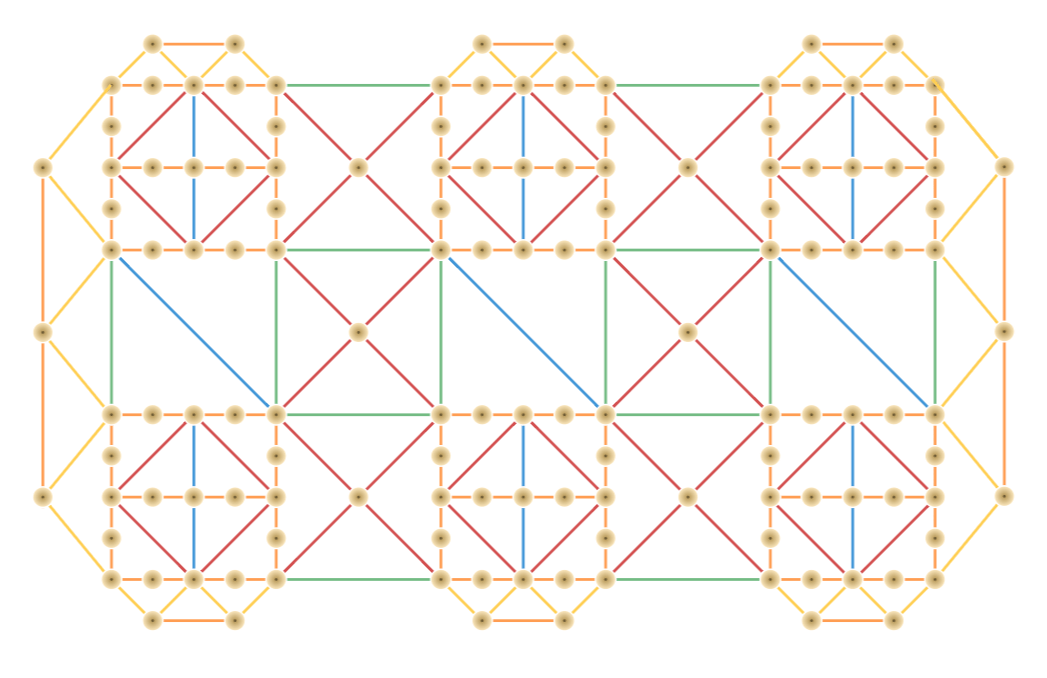

If you’ve ever felt overwhelmed by the complexity of transformer architectures or struggled to grasp how attention mechanisms power today’s large language models, this illustrated guide is for you. “Everything About Transformers” offers a clear, visual journey from the origins of language models to the inner workings of modern transformers like GPT.

Comprehensive, visual storytelling: The guide traces the evolution from early neural networks and RNNs to transformers, explaining why each innovation was necessary—with hand-drawn diagrams at every step.

Step-by-step breakdowns: Learn how attention, positional encoding, encoder/decoder stacks, feed-forward networks, and residual connections all fit together, with intuitive analogies and practical examples.

Focus on fundamentals: Demystifies core concepts like self-attention, multi-head attention, masking, and cross-attention, making them accessible even if you’re new to the field.

Practical relevance: Builds a strong foundation for understanding advanced topics like FlashAttention, Mixture-of-Experts, and the latest LLM innovations.

Ideal for technical professionals: Whether you’re a data scientist, ML engineer, or AI enthusiast, this resource will help you confidently read papers, build models, or simply understand the tech behind today’s AI breakthroughs.

If you want to truly “see” how transformers work and why they matter, this is the guide to bookmark.
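To make the attention and masking ideas above concrete, here is a minimal sketch of scaled dot-product self-attention with a causal mask, written in plain Python. It is not taken from the guide. The toy vectors are invented, and the learned query/key/value projections of a real transformer are left out for brevity.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where token i only sees tokens 0..i.

    queries, keys, values: one vector (list of floats) per token.
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the query against every key up to and including position i.
        scores = [
            sum(qa * ka for qa, ka in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the visible values.
        out = [
            sum(w * values[j][dim] for j, w in enumerate(weights))
            for dim in range(len(values[0]))
        ]
        outputs.append(out)
        # Future positions get zero weight; this is the effect of the causal mask.
        all_weights.append(weights + [0.0] * (len(keys) - i - 1))
    return outputs, all_weights


# Toy input (assumption): three tokens with 2-dimensional embeddings. The same
# vectors serve as queries, keys, and values, i.e. no learned projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row sums to 1, and the zeros above the diagonal show that no token attends to a later position, which is what lets GPT-style decoders generate text left to right.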

Tool of the week

Tinker — Flexible API for Efficient Language Model Fine-Tuning

Tinker, developed by Thinking Machines Lab, is a managed API that streamlines the fine-tuning of large language models (LLMs) using parameter-efficient methods like LoRA. Designed for researchers and developers, Tinker removes the complexity of distributed training and infrastructure management, making it easy to experiment with and customize state-of-the-art models for specialized tasks.

Seamless fine-tuning: Supports a wide range of open-weight models, from compact architectures to massive mixture-of-experts (MoE) models like Qwen-235B-A22B. Switching models is as simple as changing a string in your code.

Parameter-efficient adaptation: Leverages LoRA adapters to achieve quality comparable to full fine-tuning while drastically reducing memory and compute requirements. Because the adapters are small, this enables multi-tenant serving and rapid iteration.

Evidence-backed performance: In their recent blog post, the Tinker team demonstrates—through detailed experiments—that LoRA-based fine-tuning can match the quality of full model fine-tuning across a variety of tasks. This supports the growing consensus that parameter-efficient methods are production-ready for many real-world applications.

Low-level API control: Exposes primitives like `forward_backward` and `sample`, allowing advanced users to implement custom post-training methods.

Managed infrastructure: Handles scheduling, resource allocation, and failure recovery on internal clusters, so users can focus on research rather than DevOps.

Open-source recipes: The Tinker Cookbook provides modern, ready-to-use implementations of common post-training workflows.

Tinker is already in use at Princeton, Stanford, Berkeley, and Redwood Research for applications ranging from mathematical theorem proving to reinforcement learning. Currently in private beta, Tinker is free to start, with usage-based pricing planned.
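For readers new to LoRA, the sketch below illustrates the general idea behind the parameter savings mentioned above. It does not use the Tinker API. It is a plain-Python illustration of the standard LoRA formulation, and the matrix sizes, rank, and `alpha` value are arbitrary assumptions chosen for the example.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r) are trained.
    """
    r = len(A)  # the rank is the number of rows in A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, r):
    """Return (full fine-tuning params, LoRA params) for one weight matrix."""
    return d_out * d_in, r * (d_in + d_out)


# Toy shapes (assumptions): d_out=2, d_in=3, rank r=1.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
A = [[0.5, 0.5, 0.5]]
B_zero = [[0.0], [0.0]]   # B conventionally starts at zero, so training begins from W
B_trained = [[1.0], [0.0]]  # made-up values standing in for a trained adapter
x = [1.0, 2.0, 3.0]

print(lora_forward(W, A, B_zero, x, alpha=2.0))     # identical to W x
print(lora_forward(W, A, B_trained, x, alpha=2.0))  # base output plus low-rank update

full, lora = trainable_params(4096, 4096, 16)
print(full, lora, full // lora)
```

For a single 4096 x 4096 matrix at rank 16, the adapter trains 128 times fewer parameters than full fine-tuning, which is why many adapters can share one frozen base model.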

Top News of the week

New Anthropic Study Reveals Hidden Value Conflicts and Personalities in Leading AI Models

A groundbreaking study from Anthropic and Thinking Machines has unveiled how frontier language models like Claude, OpenAI’s GPT-4, Google’s Gemini, and xAI’s Grok exhibit distinct “personalities” and value preferences when faced with complex ethical tradeoffs. By stress-testing over 300,000 scenarios that force models to choose between competing principles—such as safety vs. helpfulness or ethics vs. efficiency—the research exposes deep-seated behavioral divergences and specification gaps in today’s most advanced AI systems.

The methodology systematically generated nuanced dilemmas and analyzed responses from twelve leading models. Key findings include: (1) high inter-model disagreement strongly predicts specification violations, with OpenAI models showing 5–13× more frequent non-compliance in ambiguous scenarios; (2) specifications often lack the granularity to distinguish optimal from merely acceptable responses; and (3) models interpret principles differently, leading to moderate agreement even among AI evaluators. Notably, Claude models consistently prioritize ethical responsibility, OpenAI models favor efficiency, Gemini emphasizes emotional connection, and Grok displays risk-taking behavior. The study also highlights false-positive refusals and outlier behaviors—such as over-conservative safety blocks or political bias—underscoring the need for clearer, more actionable model specs.

These insights are critical for AI developers and users alike, offering a scalable diagnostic tool for improving model alignment and safety. As AI systems are increasingly deployed in sensitive and high-stakes environments, understanding and refining their underlying value frameworks is essential for trustworthy and reliable operation.
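As a rough illustration of how inter-model disagreement can be used as a diagnostic signal, the sketch below scores each scenario by the spread of per-model ratings and flags the most contested ones. This is an assumption-laden toy, not the study's pipeline: the 0 to 6 rating scale, the model names, the scores, and the threshold are all invented for the example.

```python
from statistics import pstdev


def disagreement(scores_by_model):
    """Population standard deviation of the models' ratings for one scenario."""
    return pstdev(list(scores_by_model.values()))


def flag_scenarios(scenarios, threshold):
    """Return (name, disagreement) pairs at or above the threshold, highest first."""
    flagged = [
        (name, disagreement(scores))
        for name, scores in scenarios.items()
        if disagreement(scores) >= threshold
    ]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical ratings: how strongly each model's answer favors one of two
# competing values, on an assumed 0 (not at all) to 6 (entirely) scale.
scenarios = {
    "scenario_a": {"model_1": 3, "model_2": 3, "model_3": 3},
    "scenario_b": {"model_1": 0, "model_2": 6, "model_3": 3},
}

for name, spread in flag_scenarios(scenarios, threshold=1.5):
    print(name, round(spread, 2))
```

Scenarios where the models agree drop out, leaving only the cases where the behavior diverges and a specification is most likely to be ambiguous or contradictory.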

Also in the news

OpenAI Launches Flexible Credits for Codex and Sora

OpenAI has introduced a new credit system for ChatGPT users, enabling pay-as-you-go access to Codex and Sora beyond standard plan limits. Credits can be purchased as needed and are valid for 12 months, offering flexibility for developers and researchers who require more extensive usage without upgrading their subscription. The credits are non-transferable but can be used interchangeably between Codex and Sora features, supporting a range of advanced AI tasks such as code review and video generation.

Memory Folding Powers DeepAgent’s Brain-Like Reasoning

A new research paper introduces DeepAgent, an end-to-end reasoning agent that autonomously discovers and uses tools for complex tasks. Central to DeepAgent is "memory folding," a brain-inspired mechanism that compresses interaction history into structured episodic, working, and tool memories. This approach reduces error accumulation and enables smaller models to perform on par with larger ones. Extensive benchmarks show DeepAgent outperforms traditional workflow-based agents, especially in open-set scenarios requiring dynamic tool retrieval and long-horizon reasoning.

Google and NUS Unveil VISTA: Self-Improving Video Generation Agent

Researchers from Google and the National University of Singapore have released VISTA, a multi-agent system that iteratively refines video prompts to enhance text-to-video generation. VISTA decomposes user prompts, generates candidate videos, and uses specialized agents to critique visual, audio, and contextual quality. The system then rewrites prompts for subsequent generations, yielding up to a 60% improvement over state-of-the-art baselines. Human evaluators preferred VISTA’s outputs in two-thirds of cases, highlighting its potential for more reliable, user-aligned video synthesis.
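The generate, critique, and rewrite cycle described above can be sketched as a small loop. This is not VISTA's code: `refine_prompt` and the three callables are hypothetical stand-ins, and the toy "critic" just checks for keywords so the example runs without any video model.

```python
def refine_prompt(prompt, generate, critique, rewrite, max_rounds=3, target=0.9):
    """Iteratively improve a prompt until the critic's score reaches the target.

    generate(prompt) -> candidate, critique(candidate) -> (score, feedback),
    rewrite(prompt, feedback) -> new prompt. All three are supplied by the caller.
    """
    best_prompt, best_score = prompt, float("-inf")
    history = []
    for _ in range(max_rounds):
        candidate = generate(prompt)
        score, feedback = critique(candidate)
        history.append((prompt, score))
        if score > best_score:
            best_prompt, best_score = prompt, score
        if score >= target:
            break
        prompt = rewrite(prompt, feedback)
    return best_prompt, best_score, history


# Toy stand-ins (assumptions): the "video" is just the prompt text, and the
# critic rewards prompts that mention lighting, camera, and audio.
REQUIRED = ["lighting", "camera", "audio"]


def toy_generate(prompt):
    return prompt


def toy_critique(video):
    missing = [kw for kw in REQUIRED if kw not in video]
    return (len(REQUIRED) - len(missing)) / len(REQUIRED), missing


def toy_rewrite(prompt, feedback):
    return f"{prompt}, specify {feedback[0]}"


best_prompt, best_score, history = refine_prompt(
    "a bear fishing", toy_generate, toy_critique, toy_rewrite, max_rounds=5
)
print(best_prompt)
print(best_score, len(history))
```

In a real system the critic would be one or more judge models scoring visual, audio, and contextual quality, and the rewrite step would itself be an LLM call.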

OpenAI Releases Open-Weight Safety Models for Custom Policy Enforcement

OpenAI has announced gpt-oss-safeguard, a pair of open-weight reasoning models (120B and 20B parameters) designed for flexible safety classification. Unlike traditional classifiers, these models interpret developer-defined safety policies at inference time, providing explainable chain-of-thought outputs. This enables organizations to rapidly adapt to emerging risks and enforce nuanced, domain-specific policies without retraining. The models are available under an Apache 2.0 license and can be freely downloaded and integrated into safety pipelines.

Tip of the week

Unlock More Creative AI Outputs with Verbalized Sampling

Ever notice your favorite LLM giving you the same predictable, "safe" answers—especially when you want something creative, like a joke, story, or brainstorming ideas? This tendency toward repetitive or conservative responses is often due to a phenomenon called mode collapse. Mode collapse happens when, after alignment and post-training, models become overly focused on the most likely or "safe" outputs, reducing the diversity and creativity of their responses.

Why does this happen?

During post-training and alignment, large language models are fine-tuned using human feedback to make their outputs more helpful, harmless, and truthful. However, human reviewers tend to prefer responses that are safe, reliable, and familiar. Over time, this introduces a bias toward "safe" answers, causing the model to ignore less common or more creative possibilities—even when those are desirable.

What’s the fix?

Stanford researchers introduced a simple, training-free prompting method called Verbalized Sampling. Instead of asking for a single answer, you prompt the model to generate multiple responses and assign a probability to each one.

Verbalized Sampling prompt from the study:

“System prompt: You are a helpful assistant. For each query, please generate a set of five possible responses, each within a separate `<response>` tag. Responses should each include a `<text>` and a numeric `<probability>`. Please sample at random from the [full distribution / tails of the distribution, such that the probability of each response is less than 0.10].

User prompt: Write a short story about a bear.”

Why does it work?

Verbalized Sampling encourages the model to tap into a broader range of its knowledge by prompting it to generate several possible answers and estimate their likelihoods. This approach helps the model move beyond its default "safe" outputs and express more diverse and creative responses—even after alignment. Experiments show that this method can double creative diversity without sacrificing quality or factual accuracy.
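Here is a minimal sketch of how you might wire this into your own code: build the system prompt, parse the tagged candidates, and pick one at random in proportion to the verbalized probabilities. The tag names (`<response>`, `<text>`, `<probability>`), the helper functions, and the sample output are assumptions made for illustration. No model is called, and the `raw` string is hand-written rather than real model output.

```python
import random
import re


def build_system_prompt(tails=False):
    """Assemble a Verbalized Sampling system prompt (wording adapted from the prompt above)."""
    source = (
        "the tails of the distribution, such that the probability of each "
        "response is less than 0.10"
        if tails
        else "the full distribution"
    )
    return (
        "You are a helpful assistant. For each query, please generate a set of "
        "five possible responses, each within a separate <response> tag. "
        "Responses should each include a <text> and a numeric <probability>. "
        f"Please sample at random from {source}."
    )


RESPONSE_RE = re.compile(
    r"<response>\s*<text>(.*?)</text>\s*"
    r"<probability>\s*([0-9]*\.?[0-9]+)\s*</probability>\s*</response>",
    re.DOTALL,
)


def parse_candidates(raw):
    """Extract (text, probability) pairs from the model's tagged output."""
    return [(text.strip(), float(prob)) for text, prob in RESPONSE_RE.findall(raw)]


def pick_one(candidates, rng):
    """Choose one candidate, weighted by its verbalized probability."""
    texts = [text for text, _ in candidates]
    weights = [prob for _, prob in candidates]
    return rng.choices(texts, weights=weights, k=1)[0]


# Hand-written stand-in for a model reply (assumption, not real output).
raw = """
<response><text>A bear learns to paint.</text><probability>0.08</probability></response>
<response><text>A bear opens a bakery.</text><probability>0.05</probability></response>
<response><text>A bear maps the stars.</text><probability>0.03</probability></response>
"""

candidates = parse_candidates(raw)
print(build_system_prompt(tails=True))
print(pick_one(candidates, random.Random(0)))
```

Send the system prompt together with your user prompt to the LLM of your choice, then pass the reply to `parse_candidates`. You can also skip the weighted pick and simply show all candidates to the user.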

When to use it:

Ideal for creative writing, brainstorming, open-ended Q&A, synthetic data generation, or anytime you want less "boring" and more varied outputs from your LLM.

Try this method the next time you want your AI to break out of its rut!

We hope you enjoyed this edition and will stay tuned for the next one. If you need help with your AI tasks and implementations, let us know. We are happy to help.