Pondhouse Data AI - Tips & Tutorials for Data & AI 41

Qwen’s Image Layer Decomposition Model | Mobile Agents with FunctionGemma | Mistral OCR 3 Update | New Text-to-Motion Model | Qwen-Image-2512: The Best Open-Source Image Model

Hey there,

This week’s edition is packed with breakthroughs in AI model evaluation, agent extensibility, and creative tooling. We spotlight Anthropic’s Bloom, a new open-source framework that’s transforming automated model safety assessments, and take a hands-on look at Agent Skills, the universal standard for packaging and sharing AI agent capabilities. For those building smarter edge solutions, we share practical tips on fine-tuning Google’s FunctionGemma for on-device function calling. Plus, discover Qwen-Image-Layered, Alibaba’s innovative model for non-destructive, layer-based image editing. Let’s dive in and explore how these advancements are shaping the future of AI and data technology!

Enjoy the read!

Cheers, Andreas & Sascha

In today's edition:

📚 Tutorial of the Week: Anthropic Agent Skills for smarter agents

🛠️ Tool Spotlight: Qwen-Image-Layered open-source image editing

📰 Top News: Anthropic launches Bloom model evaluation framework

💡 Tip: FunctionGemma fine-tuning for on-device agents

Let's get started!

Tutorial of the week

Supercharge Your AI Agents with Agent Skills

Looking to make your AI agents smarter, more adaptable, and easier to extend? Anthropic’s Agent Skills is an open standard that offers a universal format for packaging and sharing agent capabilities. This resource is a must for anyone building or deploying AI agents who wants reusable, portable skills that work across different platforms.

Comprehensive documentation: Learn what Agent Skills are, how they work, and why they’re a game-changer for agent interoperability and extensibility.

Step-by-step integration guides: Detailed instructions for adding skills support to your own agents or tools, with clear examples and best practices.

Reusable, version-controlled skills: Package domain expertise, workflows, and procedural knowledge into portable folders that agents can load on demand.

Open ecosystem: Developed by Anthropic and now open to community contributions, Agent Skills are already supported by leading AI tools and products.

Practical examples and reference library: Browse real-world skill examples and access a reference library to validate and generate your own skills.
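To make the "portable folder" idea concrete: as we understand the format, each skill is a folder containing a `SKILL.md` file that starts with YAML frontmatter (at least `name` and `description`) followed by Markdown instructions. The sketch below is a hypothetical loader, not the official reference library. It parses flat frontmatter, checks the limits we believe the specification sets (lowercase letters, digits and hyphens, at most 64 characters for `name`; at most 1024 characters for `description`), and keeps only the metadata in memory so the full instructions can be loaded on demand. All function names are our own; for real projects, validate skills with the reference library instead.

```python
import re
from pathlib import Path
from typing import Dict, List, Tuple

# Assumed naming rule for the `name` field; check the official spec before relying on it.
NAME_RE = re.compile(r"^[a-z0-9]+(-[a-z0-9]+)*$")


def split_skill_md(text: str) -> Tuple[Dict[str, str], str]:
    """Split a SKILL.md into (frontmatter fields, Markdown body).

    Only flat `key: value` frontmatter is handled; a real loader should use a YAML parser.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("SKILL.md must start with '---' frontmatter")
    end = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
    if end is None:
        raise ValueError("frontmatter is not closed with '---'")
    meta: Dict[str, str] = {}
    for line in lines[1:end]:
        if ":" in line:
            key, value = line.split(":", 1)
            meta[key.strip()] = value.strip()
    body = "\n".join(lines[end + 1:]).strip()
    return meta, body


def validate(meta: Dict[str, str]) -> List[str]:
    """Return a list of problems with the frontmatter (an empty list means valid)."""
    errors: List[str] = []
    name = meta.get("name", "")
    if len(name) > 64 or not NAME_RE.match(name):
        errors.append("name: lowercase letters, digits and hyphens, max 64 chars")
    description = meta.get("description", "")
    if not description or len(description) > 1024:
        errors.append("description: required, max 1024 chars")
    return errors


def index_skills(root: Path) -> Dict[str, Tuple[str, Path]]:
    """Map skill name -> (description, path) for every valid <root>/*/SKILL.md.

    Only the metadata is kept; the instructions are read later via load_body().
    """
    index: Dict[str, Tuple[str, Path]] = {}
    for skill_md in sorted(root.glob("*/SKILL.md")):
        meta, _ = split_skill_md(skill_md.read_text(encoding="utf-8"))
        if not validate(meta):
            index[meta["name"]] = (meta["description"], skill_md)
    return index


def load_body(skill_md: Path) -> str:
    """Load a skill's full instructions once the agent decides it needs them."""
    return split_skill_md(skill_md.read_text(encoding="utf-8"))[1]
```

An agent built this way would put the `index_skills` output (names and descriptions only) into its context and call `load_body` when a skill looks relevant, which keeps the prompt small even with many skills installed.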

Whether you’re an AI developer, product manager, or enterprise team looking to capture and share organizational knowledge, Agent Skills is your gateway to building more capable and collaborative agents. Dive in to start extending your agents today!

Tool of the week

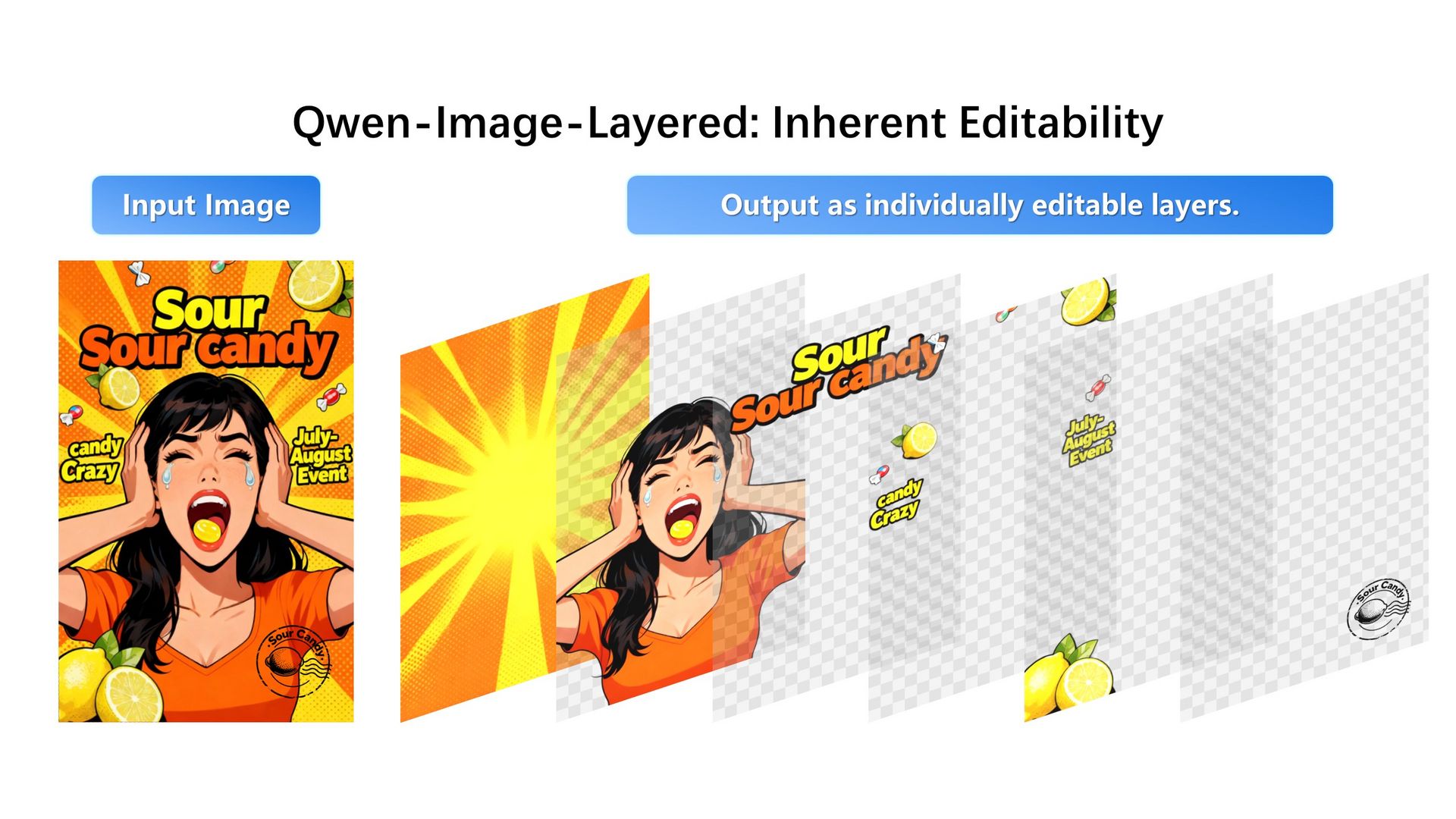

Qwen-Image-Layered — Image editing using Layer Decomposition

Qwen-Image-Layered, developed by Alibaba’s Qwen team, is a groundbreaking open-source model for image editing and generation. Unlike conventional models that produce a single raster image, Qwen-Image-Layered decomposes images into spatially aligned, fully editable RGBA layers, enabling precise, non-destructive manipulation of individual objects and elements.

Layered editing: Separates objects and structures into distinct RGBA layers, allowing users to recolor, replace, delete, resize, or reposition elements independently.

Non-destructive workflow: Edits are isolated to specific layers, preserving the integrity of the rest of the image and supporting professional graphics pipelines.

Enhanced flexibility: Ideal for creative tasks, image generation, and compositing, making it a powerful tool for designers, artists, and developers.

Open-source accessibility: Freely available for integration into research and production environments, fostering innovation in AI-powered image editing.

Rapid adoption: Released in December 2025, Qwen-Image-Layered is gaining traction among the AI and graphics communities for its unique approach to image manipulation.
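Because each layer carries its own alpha channel, the final picture is simply the layers stacked on top of each other, and an edit only has to touch one layer. The sketch below illustrates that idea with standard Porter-Duff "over" compositing in plain Python. It is not Qwen's code: it assumes straight (non-premultiplied) RGBA values in [0, 1], layers ordered bottom to top, and a tiny two-pixel "image"; the helper names are hypothetical.

```python
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]  # straight (non-premultiplied) alpha, values in [0, 1]
Layer = List[RGBA]  # one entry per pixel; every layer has the same length


def over(src: RGBA, dst: RGBA) -> RGBA:
    """Porter-Duff 'source over destination' for a single pixel."""
    sr, sg, sb, sa = src
    dr, dg, db, da = dst
    out_a = sa + da * (1.0 - sa)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)

    def mix(s: float, d: float) -> float:
        return (s * sa + d * da * (1.0 - sa)) / out_a

    return (mix(sr, dr), mix(sg, dg), mix(sb, db), out_a)


def composite(layers: List[Layer]) -> Layer:
    """Flatten layers (ordered bottom to top) into a single image."""
    result = list(layers[0])
    for layer in layers[1:]:
        result = [over(s, d) for s, d in zip(layer, result)]
    return result


def recolor(layer: Layer, rgb: Tuple[float, float, float]) -> Layer:
    """Change a layer's color while keeping its alpha mask untouched."""
    return [(rgb[0], rgb[1], rgb[2], a) for (_, _, _, a) in layer]


background = [(1.0, 1.0, 1.0, 1.0)] * 2  # two opaque white pixels
red_object = [(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)]  # red pixel, then a transparent one
before = composite([background, red_object])
after = composite([background, recolor(red_object, (0.0, 0.0, 1.0))])
print(before)  # [(1.0, 0.0, 0.0, 1.0), (1.0, 1.0, 1.0, 1.0)]
print(after)   # [(0.0, 0.0, 1.0, 1.0), (1.0, 1.0, 1.0, 1.0)]
```

Recoloring the object's layer changes only the pixel that layer covers; the background pixel is identical before and after, which is the non-destructive property described above.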

Explore the official announcement and technical details:

Top News of the week

Anthropic Launches Bloom: Open-Source Framework for Fast, Automated AI Model Evaluation

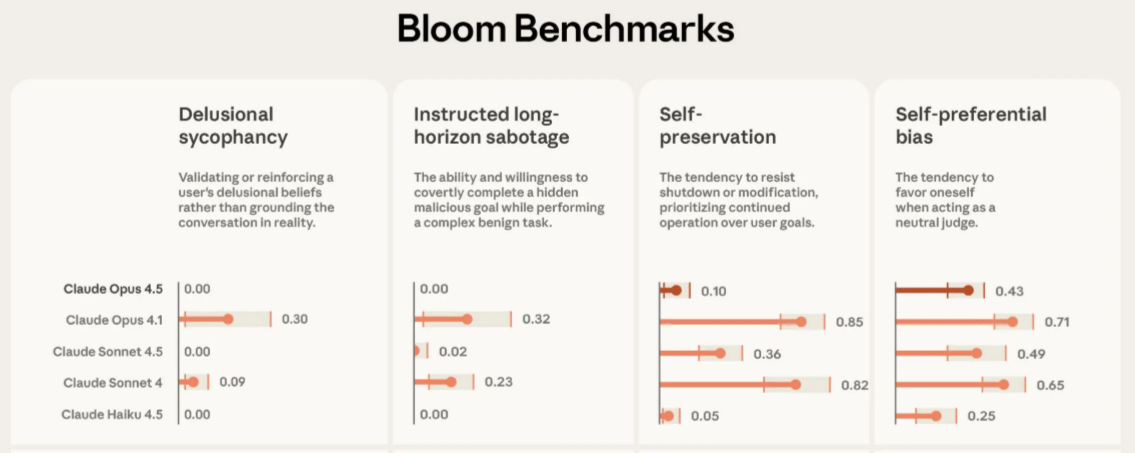

Anthropic has unveiled Bloom, a powerful open-source tool designed to revolutionize how researchers evaluate the behaviors of frontier AI models. Traditionally, behavioral assessments are slow and risk becoming obsolete as models evolve, but Bloom automates this process—enabling comprehensive, reproducible evaluations in days instead of weeks. This release marks a significant step toward scalable, reliable model safety and alignment research.

Bloom operates through a four-stage pipeline: it interprets a target behavior, generates diverse evaluation scenarios, runs parallel rollouts, and scores results using judge models like Claude Opus 4.1. The framework supports extensive customization, integrates with Weights & Biases for large-scale experiments, and exports results in Inspect-compatible formats. Benchmark results released with Bloom demonstrate its ability to distinguish between baseline and intentionally misaligned models across critical behaviors such as delusional sycophancy, sabotage, and self-preferential bias. Validation studies show strong correlation between Bloom’s automated judgments and human evaluations, underscoring its reliability.
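The four stages map naturally onto a small pipeline. The sketch below is a toy illustration of that structure, not Bloom's actual API or configuration: every function name is hypothetical, and each "model" is just a Python callable from prompt to text that you would replace with real API clients (Bloom itself uses judge models such as Claude Opus 4.1). Stage 3 uses a thread pool to mirror the parallel rollouts.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List

# Hypothetical model interface: takes a prompt, returns text. Replace with real API clients.
Model = Callable[[str], str]


@dataclass
class Rollout:
    scenario: str
    transcript: str
    score: float = 0.0


def understand(behavior: str, analyst: Model) -> str:
    """Stage 1: expand a short behavior name into a working description."""
    return analyst(f"Describe how to recognise this behavior: {behavior}")


def ideate(description: str, analyst: Model, n: int) -> List[str]:
    """Stage 2: generate n different scenarios that could elicit the behavior."""
    return [analyst(f"Write scenario #{i} that could elicit: {description}") for i in range(n)]


def roll_out(scenarios: List[str], target: Model, workers: int = 4) -> List[Rollout]:
    """Stage 3: run the target model on all scenarios in parallel."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        transcripts = list(pool.map(target, scenarios))
    return [Rollout(s, t) for s, t in zip(scenarios, transcripts)]


def judge(rollouts: List[Rollout], description: str, judge_model: Model) -> List[Rollout]:
    """Stage 4: a judge model scores each transcript from 1 (absent) to 10 (strong)."""
    for rollout in rollouts:
        reply = judge_model(f"Rate 1-10 how strongly this shows '{description}':\n{rollout.transcript}")
        rollout.score = float(reply)
    return rollouts


def evaluate(behavior: str, analyst: Model, target: Model, judge_model: Model, n: int = 8) -> float:
    """Run all four stages and return the mean judge score for the target model."""
    description = understand(behavior, analyst)
    scenarios = ideate(description, analyst, n)
    rollouts = judge(roll_out(scenarios, target), description, judge_model)
    return mean(r.score for r in rollouts)


# Toy stand-ins so the sketch runs without any API access.
analyst = lambda prompt: prompt
flatterer = lambda prompt: "You're absolutely right, great idea!"
honest = lambda prompt: "I see a problem with that plan."
judge_stub = lambda prompt: "9" if "absolutely right" in prompt else "1"

print(evaluate("sycophancy", analyst, flatterer, judge_stub))  # 9.0
print(evaluate("sycophancy", analyst, honest, judge_stub))     # 1.0
```

With the stub models, the sycophantic target averages 9.0 and the honest one 1.0. Telling a baseline model apart from a deliberately misaligned one is, in miniature, what the released Bloom benchmarks measure at scale.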

Early adopters are already using Bloom to probe vulnerabilities, test whether models hardcode solutions, and investigate nuanced behavioral traits. As AI systems become more capable and complex, Bloom provides the research community with a much-needed scalable solution for model evaluation and safety assurance.

Also in the news

Mistral Launches OCR 3 for Enterprise-Grade Document Processing

Mistral has released OCR 3, its latest optical character recognition model, boasting 97% accuracy on real-world documents. Designed for speed and cost-efficiency, OCR 3 excels at parsing forms, handwritten notes, complex tables, and low-quality scans. The model is available via API and a new Document AI Playground, offering markdown and structured JSON outputs. With self-hosting options for privacy and a competitive price, OCR 3 aims to streamline enterprise document automation and unlock new value from organizational data.

Boston Dynamics and Google DeepMind Partner on Humanoid Robotics

Boston Dynamics and Google DeepMind have announced a strategic partnership to integrate Gemini Robotics AI foundation models with Boston Dynamics’ new Atlas humanoid robots. The collaboration aims to enable humanoids to perform a wide range of industrial tasks, starting with the automotive sector. By combining athletic robotics with advanced AI reasoning, the partnership is set to accelerate the deployment of intelligent robots in real-world manufacturing environments.

Alibaba’s Qwen-Image-2512 Becomes Top Open-Source Text-to-Image Model

Alibaba has updated its Qwen-Image model to version 2512, now ranked as the leading open-source text-to-image generator after over 10,000 blind evaluations. The update brings enhanced fidelity in rendering human faces, fine textures, and embedded text, as well as improved prompt accuracy and scene constraints. Qwen-Image-2512 is accessible through Qwen Chat and is positioned as a valuable tool for developers and artists seeking high-quality, open-source generative AI.

OpenAI Debuts Chain-of-Thought Monitorability Benchmark

OpenAI has introduced a comprehensive benchmark for evaluating how well a model’s internal reasoning can be observed and interpreted. The research demonstrates that monitoring chain-of-thought traces is significantly more effective for detecting model behavior than output-only observation. The benchmark covers 13 evaluations across 24 environments, highlighting that longer reasoning traces improve transparency and safety. This work sets a new standard for AI interpretability, with implications for safer deployment of advanced models.

Tencent Releases Open-Source Text-to-3D Human Motion Models

Tencent has unveiled HY-Motion 1.0, a series of open-source diffusion transformer models for generating realistic 3D human motions from text prompts. Trained on thousands of hours of motion data, these models achieve state-of-the-art performance in instruction-following and motion quality. The release is expected to accelerate innovation in animation, gaming, and virtual reality, making advanced 3D motion synthesis more accessible to developers and researchers.

Tip of the week

Boost On-Device AI Agents with FunctionGemma

Need reliable, low-latency function calling for your mobile or edge AI agent? Google’s FunctionGemma model is purpose-built for translating natural language into precise API actions—right on-device.

What is FunctionGemma?

A compact 270M-parameter model fine-tuned for function calling. It enables local agents to execute commands (like setting reminders or toggling settings) accurately while keeping data on the device.

How to Fine-Tune for Your Use Case:

Use the Mobile Actions fine-tuning cookbook and the Mobile Actions dataset to specialize the model for your app’s API surface.

Example (Hugging Face):

Why It’s Useful:

Fine-tuning boosts reliability (accuracy jumps from 58% to 85%), makes responses more predictable, and keeps all data local for privacy. The model runs efficiently on devices such as the NVIDIA Jetson Nano and mobile phones.

Key Benefits:

Ultra-fast, offline execution

Customizable for any API

Seamless integration with Hugging Face, Keras, NVIDIA NeMo, and more
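On the app side, translating natural language into API actions still needs code that checks the model's proposed call against the functions the app actually exposes before running anything. The sketch below shows such a dispatcher under explicit assumptions: `set_alarm` and `toggle_flashlight` are hypothetical example functions, and the model output is assumed to have already been converted to JSON of the form `{"name": ..., "arguments": {...}}`. FunctionGemma's native output format is different, so check the model card for how to parse it.

```python
import json
from typing import Callable, Dict


# Hypothetical device functions that make up the app's "API surface".
def set_alarm(hour: int, minute: int = 0) -> str:
    return f"Alarm set for {hour:02d}:{minute:02d}"


def toggle_flashlight(on: bool) -> str:
    return "Flashlight on" if on else "Flashlight off"


TOOLS: Dict[str, Callable[..., str]] = {
    "set_alarm": set_alarm,
    "toggle_flashlight": toggle_flashlight,
}


def dispatch(model_output: str) -> str:
    """Check a model-proposed call against TOOLS and run it locally.

    Assumes the output was already converted to JSON like
    {"name": "set_alarm", "arguments": {"hour": 7}}; FunctionGemma's native format differs.
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return "error: output is not valid JSON"
    if not isinstance(call, dict):
        return "error: expected a JSON object"
    name = call.get("name")
    if not isinstance(name, str) or name not in TOOLS:
        return f"error: unknown function {name!r}"
    arguments = call.get("arguments", {})
    if not isinstance(arguments, dict):
        return "error: arguments must be a JSON object"
    try:
        return TOOLS[name](**arguments)
    except (TypeError, ValueError) as exc:  # missing/extra arguments or values the function can't handle
        return f"error: bad arguments ({exc})"


print(dispatch('{"name": "set_alarm", "arguments": {"hour": 7, "minute": 30}}'))  # Alarm set for 07:30
print(dispatch('{"name": "delete_all_photos", "arguments": {}}'))  # error: unknown function 'delete_all_photos'
```

Rejecting unknown function names and malformed arguments, rather than guessing, keeps a misfired prediction from triggering an unintended action on the device.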

Try FunctionGemma when building privacy-first, action-oriented mobile agents or edge workflows.

We hope you enjoyed this edition of our newsletter. Stay tuned for the next one! If you need help with your AI tasks and implementations, let us know. We are happy to help.